Data Wrangling

- Weekdays

- Menstrual phase

- Fertility estimation

- Contraception

- Singles vs couples

- Living situation

- Merge surveys

- Code open answer

- cycle length

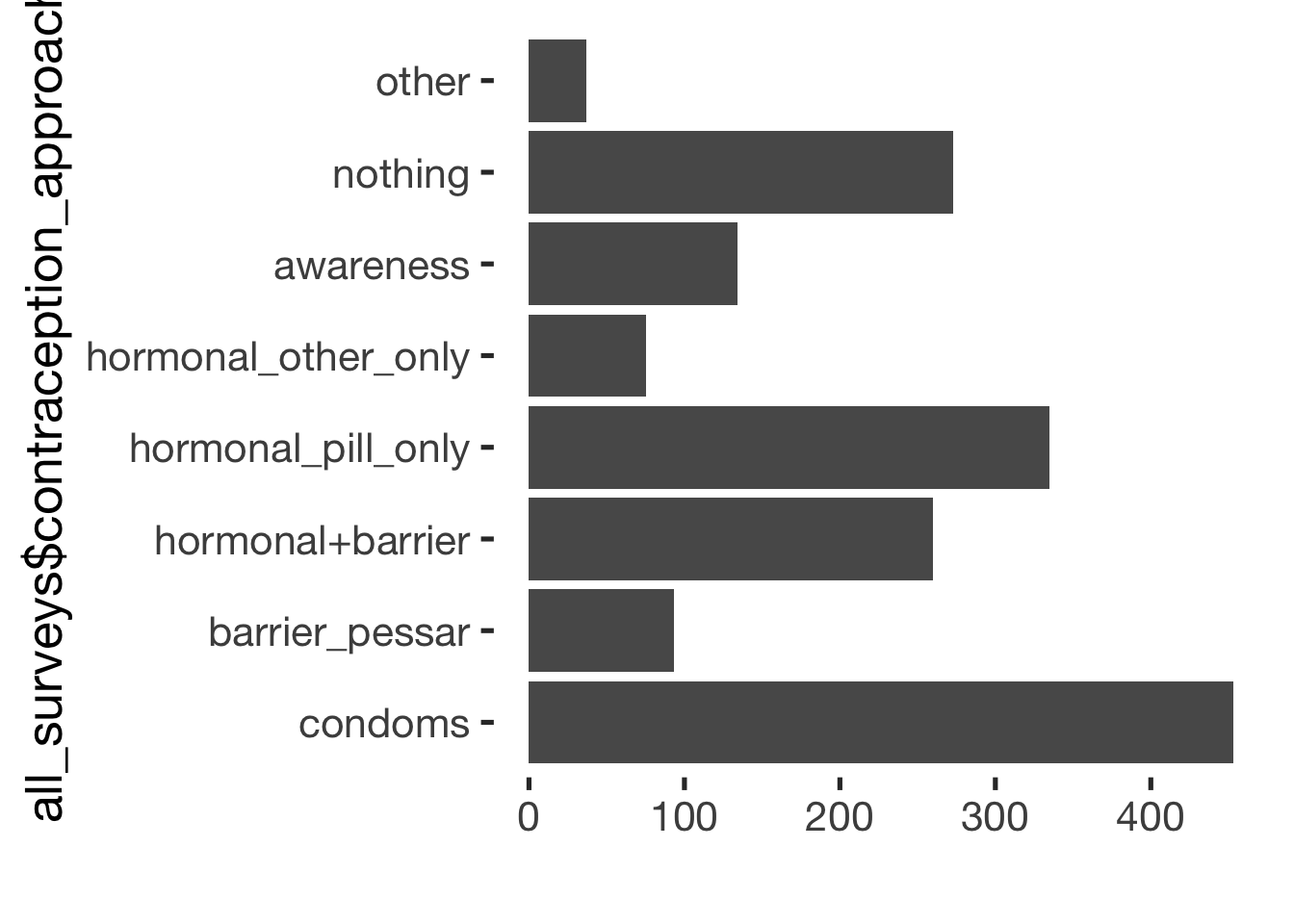

- choice of contraception

- Fix variables

- Fertility awareness

- Merge diary

- Singles

- network

- Code open answers diary

- Social diary

- Sex dummy variables

- Final edits

- Sanity checks

- save

source("0_helpers.R")

library(tidylog)

knitr::opts_chunk$set(error = FALSE)

load("data/pretty_raw.rdata")

knit_print.alpha <- knitr:::knit_print.default

registerS3method("knit_print", "alpha", knit_print.alpha)Weekdays

s3_daily$weekday = format(as.POSIXct(s3_daily$created), format = "%w")

s3_daily$weekend <- ifelse(s3_daily$weekday %in% c(0,5,6), 1, 0)

s3_daily$weekday <- car::Recode(s3_daily$weekday, "0='Sunday';1='Monday';2='Tuesday';3='Wednesday';4='Thursday';5='Friday';6='Saturday'",as.factor =T, levels = c('Monday','Tuesday','Wednesday','Thursday','Friday','Saturday','Sunday'))

hour_string_to_period = function(hour_string) {

duration(as.numeric(stringr::str_sub(hour_string, 1,2)), units = "hours") + duration(as.numeric(stringr::str_sub(hour_string, 4,5)), units = "minutes")

}

s3_daily$sleep_awoke_time = hour_string_to_period(s3_daily$sleep_awoke_time)

s3_daily$sleep_fell_asleep_time = hour_string_to_period(s3_daily$sleep_fell_asleep_time)

s3_daily$sleep_duration = ifelse(

s3_daily$sleep_awoke_time >= s3_daily$sleep_fell_asleep_time,

s3_daily$sleep_awoke_time - s3_daily$sleep_fell_asleep_time,

dhours(24) - s3_daily$sleep_fell_asleep_time + s3_daily$sleep_awoke_time

) / 60 / 60## Note: method with signature 'Duration#ANY' chosen for function '-',

## target signature 'Duration#Duration'.

## "ANY#Duration" would also be valids3_daily = s3_daily %>%

mutate(created_date = as.Date(created - hours(10))) %>% # don't count night time as next day

group_by(session) %>%

mutate(first_diary_day = min(created_date)) %>%

ungroup()## mutate: new variable 'created_date' with 325 unique values and 0% NA## group_by: one grouping variable (session)## mutate (grouped): new variable 'first_diary_day' with 208 unique values and 0% NA## ungroup: no grouping variablesstopifnot(s3_daily %>% drop_na(session, created_date) %>%

group_by(session, created_date) %>% filter(n()>1) %>% nrow() == 0)## drop_na: no rows removed## group_by: 2 grouping variables (session, created_date)## filter (grouped): removed all rows (100%)Menstrual phase

## mutate: new variable 'ended_date' with 216 unique values and 3% NA# s1_demo %>%

# filter(menstruation_last < ended_date - days(40)) %>%

# select(menstruation_last, ended_date, menstruation_last_certainty, contraception_method)

s1_menstruation_start = s1_demo %>% filter(!is.na(menstruation_last)) %>%

filter(menstruation_last >= ended_date - days(40)) %>% # only last menstruation that weren't ages ago

mutate(created_date = as.Date(created)) %>%

select(session, created_date, menstruation_last) %>% rename(menstrual_onset_date_inferred = menstruation_last)## filter: removed 355 rows (21%), 1,305 rows remaining## filter: removed 19 rows (1%), 1,286 rows remaining## mutate: new variable 'created_date' with 202 unique values and 0% NA## select: dropped 103 variables (created, modified, ended, expired, info_study, …)## rename: renamed one variable (menstrual_onset_date_inferred)s5_hadmenstruation = s5_hadmenstruation %>%

filter(!is.na(last_menstrual_onset_date)) %>%

mutate(created_date = as.Date(created)) %>%

select(session, created_date, last_menstrual_onset_date) %>% rename(menstrual_onset_date_inferred = last_menstrual_onset_date) %>%

filter(!duplicated(session))## filter: removed 127 rows (22%), 443 rows remaining## mutate: new variable 'created_date' with 153 unique values and 0% NA## select: dropped 6 variables (created, modified, ended, expired, had_menstrual_bleeding, …)## rename: renamed one variable (menstrual_onset_date_inferred)## filter: removed one row (<1%), 442 rows remaining##

## FALSE

## 442Fertility estimation

LH surges and sex hormones

lab = readxl::read_xlsx("data/Datensatz_Zyklusstudie_Labor.xlsx")

lab = lab %>%

rename(created_date = `Datum Lab Session`) %>%

filter(!is.na(`VPN-CODE`), !is.na(created_date)) %>%

mutate(short = str_sub(Tagebuchcode, 1, 7),

lab_only_no_diary = is.na(short),

short = if_else(is.na(short), `VPN-CODE`, short),

created_date = as.Date(created_date),

`Date LH surge` = as.Date(if_else(`Date LH surge` == "xxx", NA_real_, as.numeric(`Date LH surge`)), origin = "1899-12-30")) # some excel problem, where nrs are repeated at end, so we shorten it## rename: renamed one variable (created_date)## filter: removed 37 rows (6%), 628 rows remaining## mutate: converted 'created_date' from double to Date (0 new NA)## converted 'Date LH surge' from character to Date (33 new NA)## new variable 'short' with 157 unique values and 0% NA## new variable 'lab_only_no_diary' with 2 unique values and 0% NAlab %>% mutate(n_women = n_distinct(`VPN-CODE`),

n_diary_participants = n_distinct(str_sub(Tagebuchcode, 1, 7), na.rm = T)) %>%

group_by(n_women,n_diary_participants, `VPN-CODE`) %>%

summarise(days = n(), surges = n_nonmissing(`Date LH surge`)) %>%

select(-`VPN-CODE`) %>%

summarise_all(mean)## mutate: new variable 'n_women' with one unique value and 0% NA## new variable 'n_diary_participants' with one unique value and 0% NA## group_by: 3 grouping variables (n_women, n_diary_participants, VPN-CODE)## summarise: now 159 rows and 5 columns, 2 group variables remaining (n_women, n_diary_participants)## select: dropped one variable (VPN-CODE)## summarise_all: now one row and 4 columns, one group variable remaining (n_women)## # A tibble: 1 x 4

## n_women n_diary_participants days surges

## <int> <int> <dbl> <dbl>

## 1 159 149 3.95 1.77## [1] 8## [1] 19## [1] 1235# setdiff(lab$short, s1_demo$short_demo) %>% unique() # all codes found

lab <- lab %>% filter(!lab_only_no_diary) %>% select(-lab_only_no_diary)## filter: removed 32 rows (5%), 596 rows remaining## select: dropped one variable (lab_only_no_diary)## select: dropped 152 variables (created, modified, ended, expired, browser, …)## filter: removed all rows (100%)## inner_join: added 51 columns (VPN-CODE, Tagebuchcode, VPN-Zahl, created_date, Uhrzeit, …)## > rows only in x (1,235)## > rows only in y ( 44)## > matched rows 552 (includes duplicates)## > =======## > rows total 552## filter: removed all rows (100%)## filter: removed all rows (100%)s3_daily <- s3_daily %>% full_join(lab %>% select(-`Date LH surge`, -`Menstrual Onset`), by = c("session", "short", "created_date"), suffixes = c("_diary", "_lab"))## select: dropped 2 variables (Date LH surge, Menstrual Onset)## full_join: added 48 columns (VPN-CODE, Tagebuchcode, VPN-Zahl, Uhrzeit, exclude_luteal_too_long, …)## > rows only in x 62,307## > rows only in y 193## > matched rows 359## > ========## > rows total 62,859## select: dropped all variables## [1] 0## select: dropped 200 variables (session, created, modified, expired, browser, …)## # A tibble: 5 x 5

## description ended `IBL_Estradiol pg/ml` var_miss n_miss

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 Missing values per variable 1672 62337 64009 64009

## 2 Missing values in 1 variables 1 0 1 60851

## 3 Missing values in 2 variables 0 0 2 1486

## 4 Missing values in 0 variables 1 1 0 336

## 5 Missing values in 1 variables 0 1 1 186s3_daily <- s3_daily %>%

full_join(

lab %>%

filter(!is.na(`Date LH surge`), exclude_luteal_too_long == 0) %>%

mutate(created_date = `Date LH surge`) %>%

select(session, short, created_date, `Date LH surge`),

by = c("session", "short", "created_date"), suffixes = c("_diary", "_lab"))## filter: removed 322 rows (58%), 230 rows remaining## mutate: changed 159 values (69%) of 'created_date' (0 new NA)## select: dropped 49 variables (VPN-CODE, Tagebuchcode, VPN-Zahl, Uhrzeit, exclude_luteal_too_long, …)## full_join: added one column (Date LH surge)## > rows only in x 62,684## > rows only in y 55## > matched rows 175## > ========## > rows total 62,914## filter: removed all rows (100%)# because of the typos in the lab session codes, we have to merge the long ones back on

xtabs(~ is.na(session) + is.na(`VPN-CODE`), data = s3_daily)## is.na(`VPN-CODE`)

## is.na(session) FALSE TRUE

## FALSE 552 62362## is.na(`VPN-CODE`)

## is.na(short) FALSE TRUE

## FALSE 552 62362## is.na(`VPN-CODE`)

## is.na(ended) FALSE TRUE

## FALSE 354 60833

## TRUE 198 1529## is.na(`Progesterone pg/ml`)

## is.na(ended) FALSE TRUE

## FALSE 329 60858

## TRUE 180 1547#

# diary %>% filter(!is.na(Age)) %>%

# select(short, Age, age, relationship_status, Relationship_status, `MEAN Größe`, `MEAN Gewicht`, height, weight) %>%

# group_by(short) %>%

# summarise_all(first) %>%

# distinct() %>%

# mutate(age_diff = abs(Age - age),

# height_diff = abs(height- `MEAN Größe`),

# weight_diff = abs(weight - `MEAN Gewicht`),

# rel_diff = abs(relationship_status - Relationship_status)) %>%

# arrange(age_diff) %>% ViewCenter sex hormones

We remove outliers that are more than 3 SD from the mean and center within groups (logged and non-logged).

outliers_to_missing <- function(x, sd_multiplier = 3) {

if_else(x > (mean(x, na.rm = T) + sd_multiplier * sd(x, na.rm = T)) |

x < (mean(x, na.rm = T) - sd_multiplier * sd(x, na.rm = T)),

NA_real_, x)

}

s3_daily <- s3_daily %>%

ungroup() %>%

mutate(

`Progesterone pg/ml` = outliers_to_missing(`Progesterone pg/ml`),

`Estradiol pg/ml` = outliers_to_missing(`Estradiol pg/ml`),

`IBL_Estradiol pg/ml` = outliers_to_missing(`IBL_Estradiol pg/ml`),

`Testosterone pg/ml` = outliers_to_missing(`Testosterone pg/ml`),

`Cortisol nmol/l` = outliers_to_missing(`Cortisol nmol/l`)

) %>%

group_by(session) %>%

mutate(

progesterone_mean = mean(`Progesterone pg/ml`, na.rm = T),

`progesterone_diff` = `Progesterone pg/ml` - progesterone_mean,

progesterone_log_mean = mean(log(`Progesterone pg/ml`), na.rm = T),

progesterone_log_diff = log(`Progesterone pg/ml`) - progesterone_log_mean,

estradiol_mean = mean(`Estradiol pg/ml`, na.rm = T),

estradiol_diff = `Estradiol pg/ml` - estradiol_mean,

estradiol_log_mean = mean(log(`Estradiol pg/ml`), na.rm = T),

estradiol_log_diff = log(`Estradiol pg/ml`) - estradiol_log_mean,

ibl_estradiol_mean = mean(`IBL_Estradiol pg/ml`, na.rm = T),

ibl_estradiol_diff = `IBL_Estradiol pg/ml` - ibl_estradiol_mean,

ibl_estradiol_log_mean = mean(log(`IBL_Estradiol pg/ml`), na.rm = T),

ibl_estradiol_log_diff = log(`IBL_Estradiol pg/ml`) - ibl_estradiol_log_mean,

testosterone_mean = mean(`Testosterone pg/ml`, na.rm = T),

testosterone_diff = `Testosterone pg/ml` - testosterone_mean,

testosterone_log_mean = mean(log(`Testosterone pg/ml`), na.rm = T),

testosterone_log_diff = log(`Testosterone pg/ml`) - testosterone_log_mean,

cortisol_mean = mean(`Cortisol nmol/l`, na.rm = T),

cortisol_diff = `Cortisol nmol/l` - cortisol_mean,

cortisol_log_mean = mean(log(`Cortisol nmol/l`), na.rm = T),

cortisol_log_diff = log(`Cortisol nmol/l`) - cortisol_log_mean

) %>%

ungroup()## ungroup: no grouping variables## mutate: changed 10 values (<1%) of 'Cortisol nmol/l' (10 new NA)## changed 5 values (<1%) of 'Testosterone pg/ml' (5 new NA)## changed 8 values (<1%) of 'Progesterone pg/ml' (8 new NA)## changed 4 values (<1%) of 'Estradiol pg/ml' (4 new NA)## changed 7 values (<1%) of 'IBL_Estradiol pg/ml' (7 new NA)## group_by: one grouping variable (session)## mutate (grouped): new variable 'progesterone_mean' with 139 unique values and 88% NA## new variable 'progesterone_diff' with 500 unique values and 99% NA## new variable 'progesterone_log_mean' with 139 unique values and 88% NA## new variable 'progesterone_log_diff' with 500 unique values and 99% NA## new variable 'estradiol_mean' with 75 unique values and 93% NA## new variable 'estradiol_diff' with 72 unique values and >99% NA## new variable 'estradiol_log_mean' with 75 unique values and 93% NA## new variable 'estradiol_log_diff' with 72 unique values and >99% NA## new variable 'ibl_estradiol_mean' with 139 unique values and 88% NA## new variable 'ibl_estradiol_diff' with 516 unique values and 99% NA## new variable 'ibl_estradiol_log_mean' with 139 unique values and 88% NA## new variable 'ibl_estradiol_log_diff' with 516 unique values and 99% NA## new variable 'testosterone_mean' with 139 unique values and 88% NA## new variable 'testosterone_diff' with 515 unique values and 99% NA## new variable 'testosterone_log_mean' with 139 unique values and 88% NA## new variable 'testosterone_log_diff' with 527 unique values and 99% NA## new variable 'cortisol_mean' with 139 unique values and 88% NA## new variable 'cortisol_diff' with 533 unique values and 99% NA## new variable 'cortisol_log_mean' with 139 unique values and 88% NA## new variable 'cortisol_log_diff' with 541 unique values and 99% NA## ungroup: no grouping variablesFertility awareness

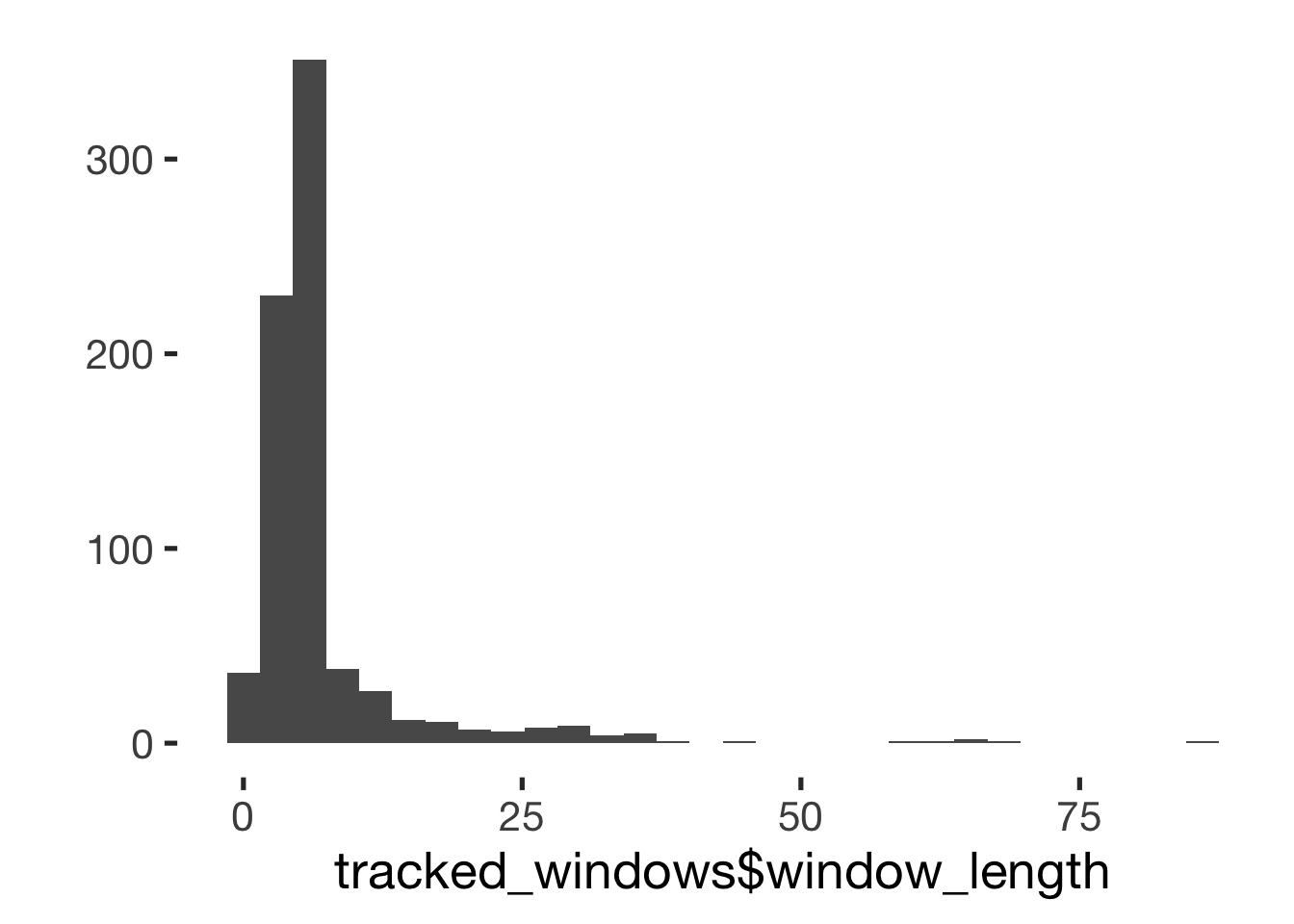

tracked_windows <- s4_followup %>% select(short, starts_with("aware_fertile"), -ends_with("block"), -aware_fertile_reason_unusual, -aware_fertile_effects) %>%

filter(aware_fertile_phases_number > 0) %>%

mutate_all(as.character) %>%

gather(cycle, date, -short, -aware_fertile_phases_number) %>%

tbl_df() %>%

mutate(cycle = str_sub(cycle, str_length("aware_fertile_") + 1)) %>%

separate(cycle, c("cycle", "startend")) %>%

mutate(date = as.Date(date)) %>%

spread(startend, date) %>%

mutate(window_length = end - start,

date_of_ovulation_awareness = end - days(1))## select: dropped 37 variables (session, created, modified, ended, expired, …)## filter: removed 867 rows (74%), 304 rows remaining## mutate_all: converted 'aware_fertile_phases_number' from double to character (0 new NA)## converted 'aware_fertile_1_start' from Date to character (0 new NA)## converted 'aware_fertile_1_end' from Date to character (0 new NA)## converted 'aware_fertile_2_start' from Date to character (0 new NA)## converted 'aware_fertile_2_end' from Date to character (0 new NA)## converted 'aware_fertile_3_start' from Date to character (0 new NA)## converted 'aware_fertile_3_end' from Date to character (0 new NA)## gather: reorganized (aware_fertile_1_start, aware_fertile_1_end, aware_fertile_2_start, aware_fertile_2_end, aware_fertile_3_start, …) into (cycle, date) [was 304x8, now 1824x4]## mutate: changed 1,824 values (100%) of 'cycle' (0 new NA)## mutate: converted 'date' from character to Date (0 new NA)## spread: reorganized (startend, date) into (end, start) [was 1824x5, now 912x5]## mutate: new variable 'window_length' with 44 unique values and 18% NA## new variable 'date_of_ovulation_awareness' with 243 unique values and 18% NAs3_daily <- s3_daily %>% left_join( tracked_windows %>%

select(short, window_length, date_of_ovulation_awareness) %>%

mutate(created_date = date_of_ovulation_awareness), by = c("short", "created_date"))## select: dropped 4 variables (aware_fertile_phases_number, cycle, end, start)## mutate: new variable 'created_date' with 243 unique values and 18% NA## left_join: added 2 columns (window_length, date_of_ovulation_awareness)## > rows only in x 62,369## > rows only in y ( 367)## > matched rows 545## > ========## > rows total 62,914Compute menstrual onsets

To compute menstrual onsets from the diary data, we have to clear a few hurdles:

- diaries could be filled out until 3 am (and later in special cases), but participants will tend to count backwards from the preceding day when asked when the last menstruation occurred

- we asked women only every ~3 days about menstruation (-> interpolate)

- women could report the same menstrual onset several times (-> use the report closest to the onset, more accurate)

- women reported a last menstrual onset in the demographic questionnaire preceding the diary and in the follow-up survey following the diary

- we need to count backward and forward from each menstrual onset

- we need to include the dates from the demographic and the follow-up questionnaire without overwriting more pertinent dates from the diary

- we want to “bridge gaps” between reports of menstruation that are at most 40 days wide (because wider gaps probably mean that there was something going on with the menstrual cycle such as a miscarriage, menopause, etc.)

Therefore we use a multi-step procedure:

- Collect unique menstrual onsets reported by each woman from pre-survey, diary, and post-survey

- Expand the onsets into time-series by participant.

- “Merge”/prefer reports closer to the onset when several different reports were made

- Count forward & backward.

- Assign cycle numbers.

- Merge on participant & created_date.

# step 1

menstrual_onsets = s3_daily %>%

group_by(session) %>%

arrange(created) %>%

mutate(

menstrual_onset_date = as.Date(menstrual_onset_date),

menstrual_onset_date_inferred = as.Date(ifelse(!is.na(menstrual_onset_date),

menstrual_onset_date, # if date was given, take it

ifelse(!is.na(menstrual_onset), # if days ago was given

created_date - days(menstrual_onset - 1), # subtract them from current date

as.Date(NA))

), origin = "1970-01-01")

) %>%

select(session, created_date, menstrual_onset_date_inferred) %>%

filter(!is.na(menstrual_onset_date_inferred)) %>%

unique()## group_by: one grouping variable (session)## mutate (grouped): changed 0 values (0%) of 'menstrual_onset_date' (0 new NA)## new variable 'menstrual_onset_date_inferred' with 276 unique values and 93% NA## select: dropped 223 variables (created, modified, ended, expired, browser, …)## filter (grouped): removed 58,763 rows (93%), 4,151 rows remaining## add in the menstrual onsets we got from the pre and post survey and the lab

lab_onsets <- lab %>% select(session, created_date, menstrual_onset_date_inferred = `Menstrual Onset`) %>%

mutate(menstrual_onset_date_inferred = as.Date(menstrual_onset_date_inferred)) %>%

filter(!is.na(menstrual_onset_date_inferred))## select: renamed one variable (menstrual_onset_date_inferred) and dropped 50 variables## mutate: converted 'menstrual_onset_date_inferred' from double to Date (0 new NA)## filter: no rows removedmons = menstrual_onsets %>%

select(session, created_date, menstrual_onset_date_inferred) %>%

mutate(date_origin = "diary") %>%

bind_rows(

s1_menstruation_start %>% mutate(date_origin = "demo"),

s5_hadmenstruation %>% mutate(date_origin = "followup"),

lab_onsets %>% mutate(date_origin = "lab")

) %>%

filter( !is.na(menstrual_onset_date_inferred)) %>%

arrange(session, menstrual_onset_date_inferred, created_date) %>%

unique() %>%

group_by(session) %>%

# step 3: prefer reports closer to event if they conflict

mutate(

onset_diff = abs( as.double( lag(menstrual_onset_date_inferred) - menstrual_onset_date_inferred, units = "days")), # was there a change compared to the last reported menstrual onset (first one gets NA)

menstrual_onset_date_inferred = if_else(onset_diff < 7, # if last date is known, but is slightly different from current date

as.Date(NA), # attribute it to memory, not extremely short cycle, use fresher date

menstrual_onset_date_inferred, # if it's a big difference, use the current date

menstrual_onset_date_inferred # use current date if last date not known/first onset

) # if no date is assigned today, keep it like that

) %>% # carry the last MO forward

# mutate(created_date = menstrual_onset_date_inferred) %>%

filter(!is.na(menstrual_onset_date_inferred))## select: no changes## mutate (grouped): new variable 'date_origin' with one unique value and 0% NA## mutate: new variable 'date_origin' with one unique value and 0% NA

## mutate: new variable 'date_origin' with one unique value and 0% NA

## mutate: new variable 'date_origin' with one unique value and 0% NA## filter (grouped): no rows removed## group_by: one grouping variable (session)## mutate (grouped): changed 2,604 values (40%) of 'menstrual_onset_date_inferred' (2604 new NA)## new variable 'onset_diff' with 104 unique values and 20% NA## filter (grouped): removed 2,604 rows (40%), 3,827 rows remaining## [1] 3827# mons %>% filter(created_date < menstrual_onset_date_inferred) %>% View

mons %>% group_by(session, created_date) %>% filter(n()> 1)## group_by: 2 grouping variables (session, created_date)## filter (grouped): removed 3,823 rows (>99%), 4 rows remaining## # A tibble: 4 x 5

## session created_date menstrual_onset_date_in… date_origin onset_diff

## <chr> <date> <date> <chr> <dbl>

## 1 -QE3RufkOaQJi-Gdcfrron_w1D-1zp9PBFbVmbBgbiNS09… 2016-10-17 2016-10-05 demo NA

## 2 -QE3RufkOaQJi-Gdcfrron_w1D-1zp9PBFbVmbBgbiNS09… 2016-10-17 2016-11-05 lab 31

## 3 jWdPyo_IKd4KJfTpZNMQ_1vdfAvhO3-82Y7qnm-nHRE6Q9… 2016-09-19 2016-09-02 demo NA

## 4 jWdPyo_IKd4KJfTpZNMQ_1vdfAvhO3-82Y7qnm-nHRE6Q9… 2016-09-19 2016-09-29 lab 27## distinct (grouped): removed 2 rows (<1%), 3,825 rows remaining## [1] 3825## group_by: one grouping variable (session)## filter (grouped): removed 3,293 rows (86%), 534 rows remaining## # A tibble: 534 x 5

## session created_date menstrual_onset_date_in… date_origin onset_diff

## <chr> <date> <date> <chr> <dbl>

## 1 _EQ98zBViYQhrRRoVXVag6gA3YYU_xkE0kQaCOhlsV24N… 2016-10-27 2016-10-05 demo NA

## 2 _EQ98zBViYQhrRRoVXVag6gA3YYU_xkE0kQaCOhlsV24N… 2016-11-03 2016-11-03 diary 29

## 3 _EQ98zBViYQhrRRoVXVag6gA3YYU_xkE0kQaCOhlsV24N… 2016-11-14 2016-12-01 lab 27

## 4 _EQ98zBViYQhrRRoVXVag6gA3YYU_xkE0kQaCOhlsV24N… 2017-01-04 2016-12-31 diary 30

## 5 -QE3RufkOaQJi-Gdcfrron_w1D-1zp9PBFbVmbBgbiNS0… 2016-10-17 2016-10-05 demo NA

## 6 -QE3RufkOaQJi-Gdcfrron_w1D-1zp9PBFbVmbBgbiNS0… 2016-10-17 2016-11-05 lab 31

## 7 -QE3RufkOaQJi-Gdcfrron_w1D-1zp9PBFbVmbBgbiNS0… 2016-11-17 2016-12-03 lab 28

## 8 -QE3RufkOaQJi-Gdcfrron_w1D-1zp9PBFbVmbBgbiNS0… 2017-01-02 2017-01-02 followup 30

## 9 10tuOUPxebpLQWXZk93kcys9TipSZaW1wqtXV30ynk8Ey… 2016-05-03 2016-04-02 demo NA

## 10 10tuOUPxebpLQWXZk93kcys9TipSZaW1wqtXV30ynk8Ey… 2016-05-09 2016-05-09 diary 37

## # … with 524 more rows# mons %>% filter(session %starts_with% "2x-juq") %>% View()

# now turn our dataset of menstrual onsets into full time series

menstrual_days = mons %>% distinct(session, created_date) %>%

arrange(session, created_date) %>%

# step 2 expand into time-series for participant

full_join(s3_daily %>% select(session, created_date), by = c("session", "created_date")) %>%

full_join(mons %>% mutate(created_date = menstrual_onset_date_inferred), by = c("session", "created_date")) %>%

mutate(date_origin = if_else(is.na(date_origin), "not_onset", date_origin)) %>%

group_by(session) %>%

complete(created_date = full_seq(created_date, period = 1)) %>%

mutate(date_origin = if_else(is.na(date_origin), "unobserved_day", date_origin)) %>%

arrange(created_date) %>%

distinct(session, created_date, menstrual_onset_date_inferred, .keep_all = TRUE) %>%

arrange(session, created_date, menstrual_onset_date_inferred) %>%

distinct(session, created_date, .keep_all = TRUE)## distinct (grouped): removed 2 rows (<1%), 3,825 rows remaining## select: dropped 223 variables (created, modified, ended, expired, browser, …)## full_join: added no columns## > rows only in x 1,576## > rows only in y 60,665## > matched rows 2,249## > ========## > rows total 64,490## mutate (grouped): changed 3,377 values (88%) of 'created_date' (0 new NA)## full_join: added 3 columns (menstrual_onset_date_inferred, date_origin, onset_diff)## > rows only in x 62,654## > rows only in y 1,991## > matched rows 1,836## > ========## > rows total 66,481## mutate (grouped): changed 62,654 values (94%) of 'date_origin' (62654 fewer NA)## group_by: one grouping variable (session)## mutate (grouped): changed 44,337 values (40%) of 'date_origin' (44337 fewer NA)## distinct (grouped): no rows removed

## distinct (grouped): no rows removed##

## demo diary followup lab not_onset unobserved_day

## 1243 1884 417 283 62654 44337menstrual_days %>% filter(date_origin != "filledin") %>% group_by(session) %>% summarise(n = n()) %>% summarise(mean(n))## filter (grouped): no rows removed## group_by: one grouping variable (session)## summarise: now 1,545 rows and 2 columns, ungrouped## summarise: now one row and one column, ungrouped## # A tibble: 1 x 1

## `mean(n)`

## <dbl>

## 1 71.7## group_by: one grouping variable (session)## summarise: now 1,545 rows and 2 columns, ungrouped## summarise: now one row and one column, ungrouped## # A tibble: 1 x 1

## `mean(n)`

## <dbl>

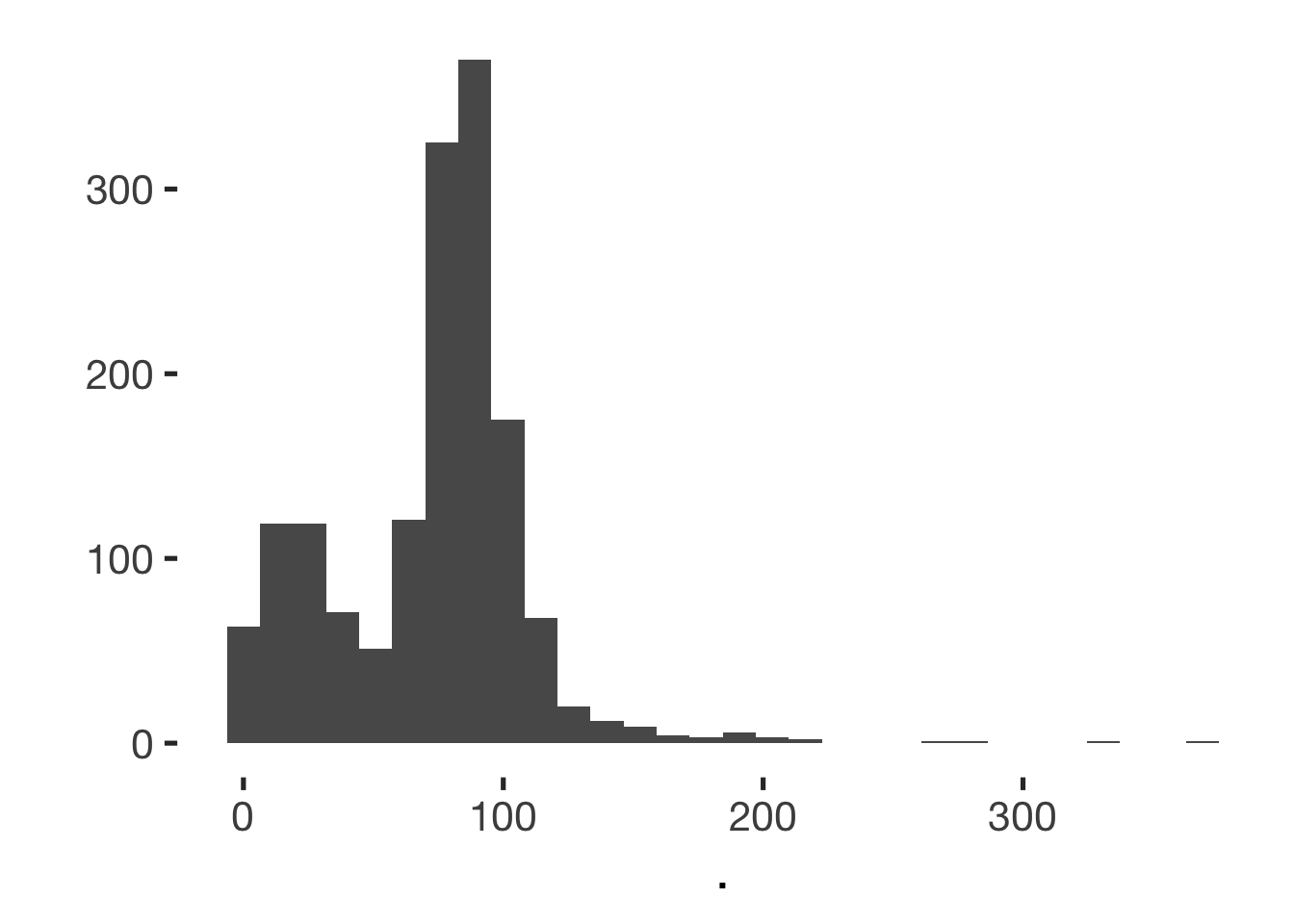

## 1 71.7## group_by: one grouping variable (session)## summarise: now 1,545 rows and 2 columns, ungrouped## `stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

menstrual_days %>% drop_na(session, created_date) %>%

group_by(session, created_date) %>% filter(n()>1) %>% nrow() %>% { . == 0} %>% stopifnot()## drop_na (grouped): no rows removed## group_by: 2 grouping variables (session, created_date)## filter (grouped): removed all rows (100%)# menstrual_onsets %>% filter(session == "_2efChMgmsXAYmalYlRY9epxS_wse0ytWYttV6tLi6FUd2FRENkr9JgVnmtzaMCs")

# mons %>% filter(session %starts_with% "_2sufSUfIWjNXg6xfRzJaCid9jzkY") %>% View()

# menstrual_onsets %>% filter(session %starts_with% "_2sufSUfIWjNXg6xfRzJaCid9jzkY") %>% View()

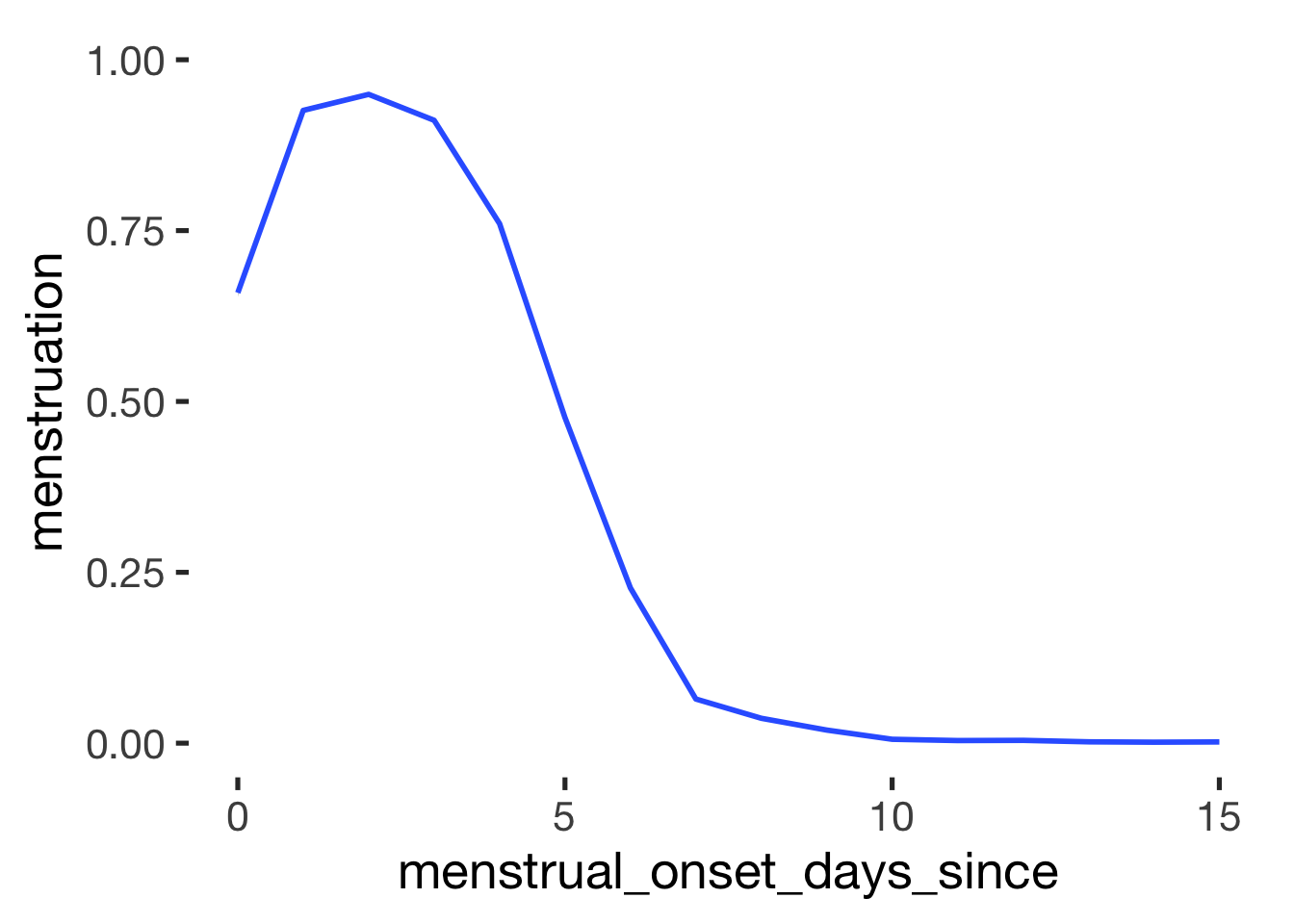

menstrual_days = menstrual_days %>%

group_by(session) %>%

mutate(

# carry the last observation (the last observed menstrual onset) backward/forward (within person), but we don't do this if we'd bridge more than 40 days this way

# first we carry it backward (because reporting is retrospective)

next_menstrual_onset = rcamisc::repeat_last(menstrual_onset_date_inferred, forward = FALSE),

# then we carry it forward

last_menstrual_onset = rcamisc::repeat_last(menstrual_onset_date_inferred),

# in the next cycle, count to the next onset, not the last

next_menstrual_onset = if_else(next_menstrual_onset == last_menstrual_onset,

lead(next_menstrual_onset),

next_menstrual_onset),

# calculate the diff to current date

menstrual_onset_days_until = as.numeric(created_date - next_menstrual_onset),

menstrual_onset_days_since = as.numeric(created_date - last_menstrual_onset)

)## group_by: one grouping variable (session)## mutate (grouped): new variable 'next_menstrual_onset' with 307 unique values and 29% NA## new variable 'last_menstrual_onset' with 338 unique values and 13% NA## new variable 'menstrual_onset_days_until' with 293 unique values and 29% NA## new variable 'menstrual_onset_days_since' with 293 unique values and 13% NAmenstrual_days %>% drop_na(session, created_date) %>%

group_by(session, created_date) %>% filter(n()>1) %>% nrow() %>% { . == 0} %>% stopifnot()## drop_na (grouped): no rows removed## group_by: 2 grouping variables (session, created_date)## filter (grouped): removed all rows (100%)avg_cycle_lengths = menstrual_days %>%

select(session, last_menstrual_onset, next_menstrual_onset) %>%

mutate(next_menstrual_onset_if_no_last = if_else(is.na(last_menstrual_onset), next_menstrual_onset, as.Date(NA_character_))) %>%

arrange(session, next_menstrual_onset_if_no_last, last_menstrual_onset) %>%

select(-next_menstrual_onset) %>%

distinct(session, last_menstrual_onset, next_menstrual_onset_if_no_last, .keep_all = TRUE) %>%

group_by(session) %>%

mutate(

number_of_cycles = n(),

cycle_nr = row_number(),

cycle_length = as.double(lead(last_menstrual_onset) - last_menstrual_onset, units = "days"),

cycle_nr_fully_observed = sum(!is.na(cycle_length)),

mean_cycle_length_diary = mean(cycle_length, na.rm = TRUE),

median_cycle_length_diary = median(cycle_length, na.rm = TRUE)) %>%

filter(!is.na(last_menstrual_onset) | !is.na(next_menstrual_onset_if_no_last))## select: dropped 6 variables (created_date, menstrual_onset_date_inferred, date_origin, onset_diff, menstrual_onset_days_until, …)## mutate (grouped): new variable 'next_menstrual_onset_if_no_last' with one unique value and 100% NA## select: dropped one variable (next_menstrual_onset)## distinct (grouped): removed 106,696 rows (96%), 4,122 rows remaining## group_by: one grouping variable (session)## mutate (grouped): new variable 'number_of_cycles' with 7 unique values and 0% NA## new variable 'cycle_nr' with 7 unique values and 0% NA## new variable 'cycle_length' with 97 unique values and 39% NA## new variable 'cycle_nr_fully_observed' with 6 unique values and 0% NA## new variable 'mean_cycle_length_diary' with 176 unique values and 14% NA## new variable 'median_cycle_length_diary' with 110 unique values and 14% NA## filter (grouped): removed 295 rows (7%), 3,827 rows remaining# avg_cycle_lengths %>% filter(session %starts_with% "_sqtMf5") %>% View("cycles")

table(is.na(avg_cycle_lengths$cycle_nr))##

## FALSE

## 3827# menstrual_onsets %>% filter(session %starts_with% "_2sufSUfIWjNXg6xfRzJaCid9jzkY") %>% View()

gaps <- s3_daily %>% filter(session %starts_with% "--_MgFd") %>% tbl_df() %>% pull(created_date) %>% diff() %>% as.numeric(.)## filter: removed 62,864 rows (>99%), 50 rows remainingstopifnot(!all(gaps == 1))

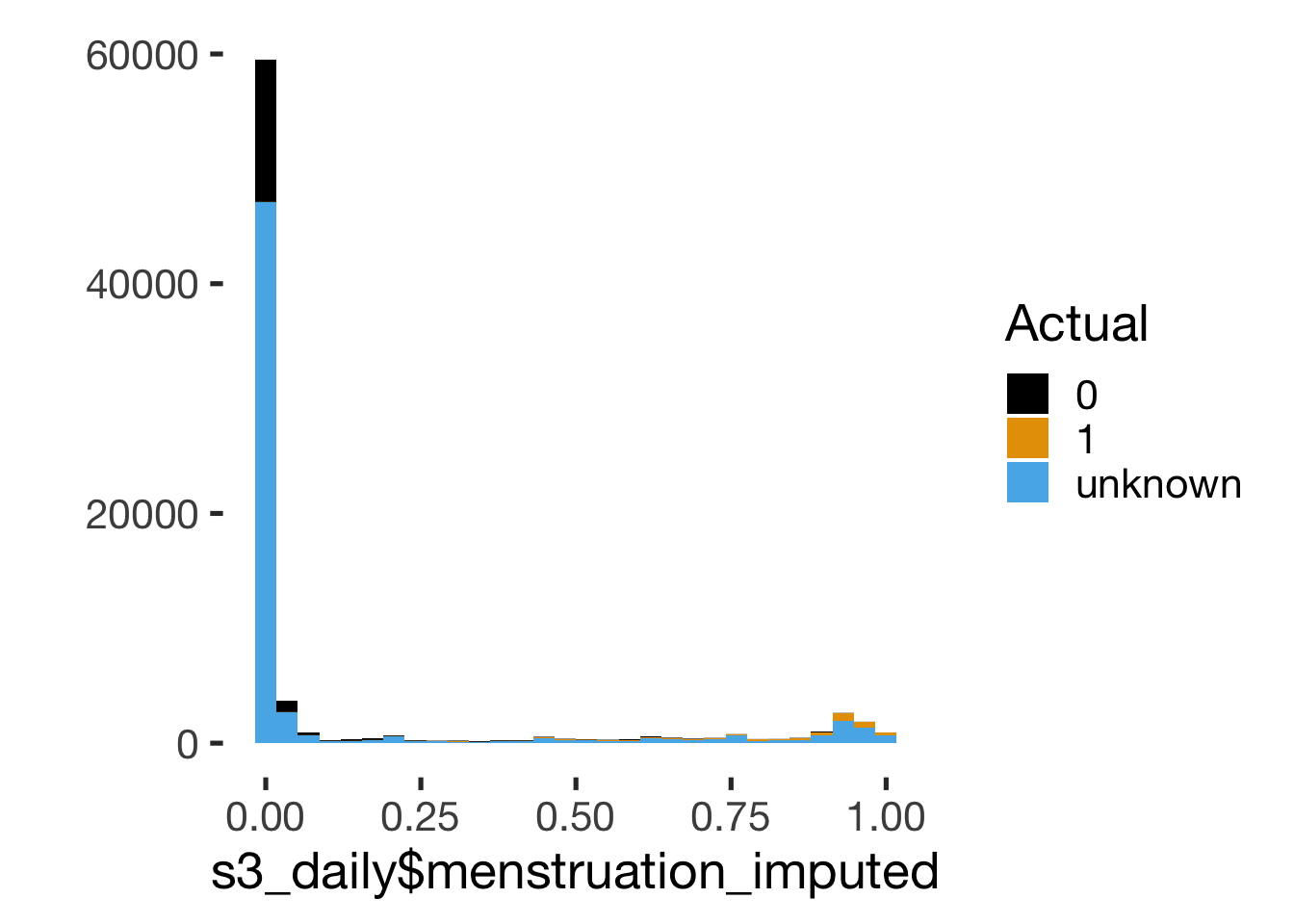

s3_daily <- s3_daily %>%

group_by(session) %>%

complete(created_date = full_seq(created_date, period = 1)) %>% # include the gap days in the diary (happens by default in formr, this just to ensure)

ungroup() %>%

mutate(diary_day_observation = case_when(

is.na(created) ~ "interpolated",

is.na(modified) ~ "not_answered",

!is.na(expired) ~ "started_not_finished",

is.na(ended) ~ "not_finished",

!is.na(ended) ~ "finished"

)) %>%

left_join(menstrual_days %>%

select(session, created_date, next_menstrual_onset, last_menstrual_onset, menstrual_onset_days_until, menstrual_onset_days_since, date_origin),

by = c("session", "created_date")

) %>%

mutate(

menstruation_today = if_else(menstruation_since_last_entry == 1, as.numeric(menstruation_today), 0),

menstruation_labelled = factor(if_else(! is.na(menstruation_today),

if_else(menstruation_today == 1, "yes", "no"),

if_else(menstrual_onset_days_since <= 5,

if_else(menstrual_onset_days_since == 0, "yes", "probably", "no"),

"no", "no")),

levels = c('yes', 'probably', 'no'))

) %>%

mutate(next_menstrual_onset_if_no_last = if_else(is.na(last_menstrual_onset), next_menstrual_onset, as.Date(NA_character_)))## group_by: one grouping variable (session)## ungroup: no grouping variables## mutate: new variable 'diary_day_observation' with 5 unique values and 0% NA## select: dropped 2 variables (menstrual_onset_date_inferred, onset_diff)## left_join: added 5 columns (next_menstrual_onset, last_menstrual_onset, menstrual_onset_days_until, menstrual_onset_days_since, date_origin)## > rows only in x 0## > rows only in y (31,569)## > matched rows 79,249## > ========## > rows total 79,249## mutate: changed 13,848 values (17%) of 'menstruation_today' (13848 fewer NA)## new variable 'menstruation_labelled' with 3 unique values and 0% NA## mutate: new variable 'next_menstrual_onset_if_no_last' with one unique value and 100% NAgaps <- s3_daily %>% filter(session %starts_with% "--_MgFd") %>% tbl_df() %>% pull(created_date) %>% diff() %>% as.numeric(.)## filter: removed 79,182 rows (>99%), 67 rows remainingstopifnot(all(gaps == 1))

s3_daily <- s3_daily %>%

group_by(session) %>%

mutate(first_diary_day = first(na.omit(first_diary_day)),

day_number = round(as.numeric(as.Date(created_date) - first_diary_day, unit = 'days'))) %>%

ungroup()## group_by: one grouping variable (session)## mutate (grouped): changed 16,583 values (21%) of 'first_diary_day' (16583 fewer NA)## new variable 'day_number' with 408 unique values and 0% NA## ungroup: no grouping variables# s3_daily %>% filter(is.na(day_number)) %>% select(session, short, created_date, ended, first_diary_day) %>% arrange(short, created_date) %>% View

table(s3_daily$day_number, exclude = NULL)##

## -263 -262 -261 -260 -259 -258 -257 -256 -255 -254 -253 -252 -251 -250 -249 -248 -247 -246 -245 -244 -243 -242

## 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

## -241 -240 -239 -238 -237 -236 -235 -234 -233 -232 -231 -230 -229 -228 -227 -226 -225 -224 -223 -222 -221 -220

## 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

## -219 -218 -217 -216 -215 -214 -213 -212 -211 -210 -209 -208 -207 -206 -205 -204 -203 -202 -201 -200 -199 -198

## 1 1 1 1 1 1 1 1 1 1 2 2 2 2 2 2 2 2 2 2 2 2

## -197 -196 -195 -194 -193 -192 -191 -190 -189 -188 -187 -186 -185 -184 -183 -182 -181 -180 -179 -178 -177 -176

## 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

## -175 -174 -173 -172 -171 -170 -169 -168 -167 -166 -165 -164 -163 -162 -161 -160 -159 -158 -157 -156 -155 -154

## 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

## -153 -152 -151 -150 -149 -148 -147 -146 -145 -144 -143 -142 -141 -140 -139 -138 -137 -136 -135 -134 -133 -132

## 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

## -131 -130 -129 -128 -127 -126 -125 -124 -123 -122 -121 -120 -119 -118 -117 -116 -115 -114 -113 -112 -111 -110

## 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

## -109 -108 -107 -106 -105 -104 -103 -102 -101 -100 -99 -98 -97 -96 -95 -94 -93 -92 -91 -90 -89 -88

## 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

## -87 -86 -85 -84 -83 -82 -81 -80 -79 -78 -77 -76 -75 -74 -73 -72 -71 -70 -69 -68 -67 -66

## 2 2 2 2 2 2 2 2 2 2 2 2 2 2 4 4 4 4 4 4 4 4

## -65 -64 -63 -62 -61 -60 -59 -58 -57 -56 -55 -54 -53 -52 -51 -50 -49 -48 -47 -46 -45 -44

## 4 4 4 4 4 4 5 5 5 5 5 5 5 5 6 6 6 6 6 6 6 7

## -43 -42 -41 -40 -39 -38 -37 -36 -35 -34 -33 -32 -31 -30 -29 -28 -27 -26 -25 -24 -23 -22

## 7 8 8 8 9 10 11 11 11 11 11 11 11 12 13 13 13 13 14 14 15 17

## -21 -20 -19 -18 -17 -16 -15 -14 -13 -12 -11 -10 -9 -8 -7 -6 -5 -4 -3 -2 -1 0

## 17 17 18 20 20 20 21 22 22 23 24 24 25 25 27 28 28 32 33 34 40 1373

## 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22

## 1339 1326 1314 1310 1303 1294 1288 1284 1277 1266 1256 1250 1242 1232 1227 1222 1212 1205 1199 1189 1175 1166

## 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44

## 1162 1155 1150 1143 1138 1133 1131 1126 1123 1113 1110 1107 1104 1099 1094 1090 1084 1080 1075 1068 1065 1056

## 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66

## 1049 1045 1040 1035 1030 1027 1025 1019 1014 1006 1001 996 989 981 974 969 964 953 940 932 909 873

## 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88

## 838 737 575 88 30 29 29 28 26 25 24 22 22 22 20 20 20 18 16 14 13 13

## 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110

## 13 13 12 11 11 10 8 8 8 7 7 7 7 7 7 7 7 6 6 6 5 5

## 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132

## 5 5 4 3 3 3 3 3 3 3 3 2 2 2 2 2 2 2 2 2 2 2

## 133 134 135 136 137 138 139 140 141 142 143 144

## 2 2 1 1 1 1 1 1 1 1 1 1## drop_na: removed 18,062 rows (23%), 61,187 rows remaining##

## 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21

## 1324 1137 1132 1104 1121 1102 1092 1095 1057 1035 1027 1038 1028 1009 1001 982 975 997 959 940 965 933

## 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43

## 935 893 903 911 886 893 878 891 854 872 857 863 859 839 834 798 819 808 826 814 809 775

## 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65

## 784 777 781 786 793 767 756 761 749 761 758 767 740 733 736 739 739 737 746 734 718 718

## 66 67 68 69 70

## 706 723 703 549 56## drop_na: removed 18,062 rows (23%), 61,187 rows remainingstopifnot(s3_daily %>% drop_na(session, day_number) %>% group_by(session, day_number) %>% filter(n() > 1) %>% nrow() == 0)## drop_na: no rows removed## group_by: 2 grouping variables (session, day_number)## filter (grouped): removed all rows (100%)gaps <- s3_daily %>%

drop_na(session) %>%

group_by(session) %>%

summarise(no_gaps = all(as.numeric(diff(created_date)) == 1),

n = n(),

range = paste(range(day_number), collapse = '-'))## drop_na: no rows removed## group_by: one grouping variable (session)## summarise: now 1,373 rows and 4 columns, ungroupedEstimate day of ovulation

# s3_daily %>% filter(short == "_sqtMf5") %>% select(short, created_date, ended, menstruation_labelled, next_menstrual_onset_if_no_last, last_menstrual_onset) %>% View("days")

s3_daily <- s3_daily %>%

left_join(avg_cycle_lengths, by = c("session", "last_menstrual_onset", "next_menstrual_onset_if_no_last")) %>%

left_join(s1_demo %>% select(session, menstruation_length), by = 'session') %>%

mutate(

next_menstrual_onset_inferred = last_menstrual_onset + days(menstruation_length),

RCD_inferred = as.numeric(created_date - next_menstrual_onset_inferred)

)## left_join: added 6 columns (number_of_cycles, cycle_nr, cycle_length, cycle_nr_fully_observed, mean_cycle_length_diary, …)## > rows only in x 13,745## > rows only in y ( 670)## > matched rows 65,504## > ========## > rows total 79,249## select: dropped 103 variables (created, modified, ended, expired, info_study, …)## left_join: added one column (menstruation_length)## > rows only in x 0## > rows only in y ( 287)## > matched rows 79,249## > ========## > rows total 79,249## mutate: new variable 'next_menstrual_onset_inferred' with 304 unique values and 18% NA## new variable 'RCD_inferred' with 186 unique values and 18% NAs3_daily %>% filter(short == "_sqtMf5", created_date == "2016-08-25") %>% pull(cycle_nr) %>% is.na() %>% isFALSE() %>% stopifnot()## filter: removed 79,248 rows (>99%), one row remaining## is.na(s3_daily$cycle_nr)

## s3_daily$diary_day_observation FALSE TRUE

## finished 51225 9962

## interpolated 13074 3509

## not_answered 501 122

## not_finished 5 0

## started_not_finished 699 152s3_daily <- s3_daily %>%

group_by(session, cycle_nr) %>%

mutate(

luteal_BC = if_else(menstrual_onset_days_until >= -15, 1, 0),

follicular_FC = if_else(menstrual_onset_days_since <= 15, 1, 0)

) %>%

mutate(

day_lh_surge = if_else(created_date == `Date LH surge`, 1, 0),

day_of_ovulation = if_else(menstrual_onset_days_until == -15, 1, 0),

day_of_ovulation_inferred = if_else(RCD_inferred == -15, 1, 0),

day_of_ovulation_forward_counted = if_else(menstrual_onset_days_since == 14, 1, 0),

date_of_ovulation_BC = min(if_else(day_of_ovulation == 1, created_date, structure(NA_real_, class="Date")), na.rm = TRUE),

date_of_ovulation_inferred = min(if_else(day_of_ovulation_inferred == 1, created_date, structure(NA_real_, class="Date")), na.rm = TRUE),

date_of_ovulation_forward_counted = min(if_else(day_of_ovulation_forward_counted == 1, created_date, structure(NA_real_, class="Date")), na.rm = TRUE),

date_of_ovulation_LH = min(`Date LH surge` + days(1), na.rm = T),

DRLH = as.numeric(created_date - date_of_ovulation_LH),

DRLH = if_else(between(DRLH, -15, 15), DRLH, NA_real_)

) %>%

ungroup() %>%

mutate_at(vars(starts_with("date_of_ovulation_")), funs(if_else(is.infinite(.), as.Date(NA_character_),.)))## group_by: 2 grouping variables (session, cycle_nr)## mutate (grouped): new variable 'luteal_BC' with 3 unique values and 30% NA## new variable 'follicular_FC' with 3 unique values and 17% NA## mutate (grouped): new variable 'day_lh_surge' with 2 unique values and >99% NA## new variable 'day_of_ovulation' with 3 unique values and 30% NA## new variable 'day_of_ovulation_inferred' with 3 unique values and 18% NA## new variable 'day_of_ovulation_forward_counted' with 3 unique values and 17% NA## new variable 'date_of_ovulation_BC' with 275 unique values and 0% NA## new variable 'date_of_ovulation_inferred' with 270 unique values and 0% NA## new variable 'date_of_ovulation_forward_counted' with 271 unique values and 0% NA## new variable 'date_of_ovulation_LH' with 148 unique values and 0% NA## new variable 'DRLH' with 32 unique values and 93% NA## ungroup: no grouping variables## mutate_at: changed 29,892 values (38%) of 'date_of_ovulation_BC' (29892 new NA)## changed 23,173 values (29%) of 'date_of_ovulation_inferred' (23173 new NA)## changed 22,763 values (29%) of 'date_of_ovulation_forward_counted' (22763 new NA)## changed 73,118 values (92%) of 'date_of_ovulation_LH' (73118 new NA)s3_daily <- s3_daily %>%

group_by(short, cycle_nr) %>%

mutate(date_of_ovulation_awareness_nr = n_nonmissing(date_of_ovulation_awareness),

date_of_ovulation_awareness = if_else(date_of_ovulation_awareness_nr == 1 &

window_length > 3 & window_length < 9,

first(na.omit(date_of_ovulation_awareness)), as.Date(NA_character_))) %>%

mutate(fertile_awareness = case_when(

is.na(date_of_ovulation_awareness) ~ NA_real_,

created_date < (date_of_ovulation_awareness + 1 - window_length) ~ 0,

created_date > (date_of_ovulation_awareness + 1) ~ 0,

TRUE ~ 1

)) %>%

ungroup()## group_by: 2 grouping variables (short, cycle_nr)## mutate (grouped): changed 238 values (<1%) of 'date_of_ovulation_awareness' (238 new NA)## new variable 'date_of_ovulation_awareness_nr' with 4 unique values and 0% NA## mutate (grouped): new variable 'fertile_awareness' with 2 unique values and >99% NA## ungroup: no grouping variables##

## FALSE TRUE

## 78942 307##

## FALSE TRUE

## 634 118## Don't know how to automatically pick scale for object of type difftime. Defaulting to continuous.## `stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

s3_daily <- s3_daily %>%

left_join(s4_followup %>% select(session, follicular_phase_length, luteal_phase_length), by = 'session') %>%

mutate(

date_of_ovulation_avg_follicular = last_menstrual_onset + days(follicular_phase_length),

date_of_ovulation_avg_luteal = next_menstrual_onset - days(luteal_phase_length + 1),

date_of_ovulation_avg_luteal_inferred = next_menstrual_onset_inferred - days(luteal_phase_length)

) %>% select(

-luteal_phase_length, -follicular_phase_length

)## select: dropped 42 variables (created, modified, ended, expired, hypothesis_guess, …)## left_join: added 2 columns (follicular_phase_length, luteal_phase_length)## > rows only in x 4,500## > rows only in y ( 9)## > matched rows 74,749## > ========## > rows total 79,249## mutate: new variable 'date_of_ovulation_avg_follicular' with 222 unique values and 84% NA## new variable 'date_of_ovulation_avg_luteal' with 212 unique values and 87% NA## new variable 'date_of_ovulation_avg_luteal_inferred' with 213 unique values and 85% NA## select: dropped 2 variables (follicular_phase_length, luteal_phase_length)s3_daily %>%

group_by(short) %>%

summarise(surges = n_distinct(`Date LH surge`, na.rm = T)) %>%

filter(surges > 0) %>%

pull(surges) %>%

table()## group_by: one grouping variable (short)## summarise: now 1,374 rows and 2 columns, ungrouped## filter: removed 1,252 rows (91%), 122 rows remaining## .

## 1 2

## 14 108# s3_daily %>%

# drop_na(session, cycle_nr) %>%

# group_by(short) %>%

# filter(4 == n_distinct(`Date LH surge`, na.rm = T)) %>% select(short, ended, DRLH, day_number, cycle_nr, created_date,menstrual_onset_days_until, menstrual_onset_days_since, `Date LH surge`) %>% View()

# s3_daily %>%

# drop_na(session, cycle_nr) %>%

# group_by(short, cycle_nr) %>%

# filter(2 == n_distinct(`Date LH surge`, na.rm = T)) %>% select(short, ended, day_number, DRLH, cycle_nr, created_date,menstrual_onset_days_until, menstrual_onset_days_since, `Date LH surge`) %>% View()

# one case of a woman who reported two surges (close together in one cycle, we use the first surge)

s3_daily %>%

group_by(short, cycle_nr) %>%

summarise(surges = n_distinct(`Date LH surge`, na.rm = T)) %>%

filter(surges > 0) %>%

pull(surges) %>%

table()## group_by: 2 grouping variables (short, cycle_nr)## summarise: now 3,441 rows and 3 columns, one group variable remaining (short)## filter (grouped): removed 3,211 rows (93%), 230 rows remaining## .

## 1

## 230stopifnot(s3_daily %>% drop_na(session, created) %>%

group_by(session, created) %>% filter(n()>1) %>% nrow() == 0)## drop_na: removed 16,583 rows (21%), 62,666 rows remaining## group_by: 2 grouping variables (session, created)## filter (grouped): removed all rows (100%)# s3_daily %>% filter(session %starts_with% "_2sufSUfIWjNXg6xfRzJaCid9jzkY") %>% select(created_date, menstrual_onset, menstrual_onset_date, menstrual_onset_days_until, menstrual_onset_days_since) %>% View()

# s3_daily %>% filter(session %starts_with% "2x-juq") %>% select(created_date, menstrual_onset, menstrual_onset_date, menstrual_onset_days_until, menstrual_onset_days_since) %>% View()

s3_daily %>% filter(is.na(cycle_nr), !is.na(next_menstrual_onset)) %>% select(short, cycle_nr, last_menstrual_onset, next_menstrual_onset) %>% nrow() %>% { . == 0 } %>% stopifnot()## filter: removed all rows (100%)## select: dropped 255 variables (session, created_date, created, modified, ended, …)s3_daily %>% filter(is.na(cycle_nr), !is.na(last_menstrual_onset)) %>% select(short, cycle_nr, last_menstrual_onset, next_menstrual_onset) %>% nrow() %>% { . == 0 } %>% stopifnot()## filter: removed all rows (100%)

## select: dropped 255 variables (session, created_date, created, modified, ended, …)# There are some 56 days across women for whom we have a last menstrual onset, but no cycle info. This happens when a last menstrual onset was reported that was more than 40 days before the beginning of the diary

crosstabs(~ is.na(cycle_nr) + is.na(menstruation_length), s3_daily)## is.na(menstruation_length)

## is.na(cycle_nr) FALSE TRUE

## FALSE 65339 165

## TRUE 630 13115crosstabs(~ is.na(cycle_nr) + is.na(menstruation_length), s3_daily %>% filter(diary_day_observation == "finished"))## filter: removed 18,062 rows (23%), 61,187 rows remaining## is.na(menstruation_length)

## is.na(cycle_nr) FALSE TRUE

## FALSE 51137 88

## TRUE 150 9812Estimate fertile window probability

s3_daily = s3_daily %>%

mutate(

FCD = menstrual_onset_days_since + 1,

RCD = menstrual_onset_days_until,

DAL = created_date - date_of_ovulation_avg_luteal,

RCD_squished = if_else(

cycle_length - FCD < 14,

29 - (cycle_length - FCD),

((FCD/ (cycle_length - 14) ) * 15)),

RCD_squished = if_else(RCD_squished < 1, 1, RCD_squished),

RCD_squished = if_else(RCD < -40, NA_real_, RCD_squished) - 30,

RCD_squished_rounded = round(RCD_squished),

RCD_inferred_squished = if_else(

FCD > menstruation_length,

NA_real_,

if_else(

as.numeric(menstruation_length) - FCD < 14,

29 - (as.numeric(menstruation_length) - FCD),

round((FCD/ (as.numeric(menstruation_length) - 14) ) * 15))

),

RCD_inferred_squished = if_else(RCD_inferred_squished < 1, 1, RCD_inferred_squished),

RCD_inferred_squished = if_else(RCD_inferred < -40, NA_real_, RCD_inferred_squished) - 30,

# add 15 days to the reverse cycle days to arrive at the estimated day of ovulation

RCD_rel_to_ovulation = RCD + 15,

RCD_fab = RCD_squished

)## mutate: new variable 'FCD' with 165 unique values and 17% NA## new variable 'RCD' with 239 unique values and 30% NA## new variable 'DAL' with 117 unique values and 87% NA## new variable 'RCD_squished' with 778 unique values and 33% NA## new variable 'RCD_squished_rounded' with 30 unique values and 33% NA## new variable 'RCD_inferred_squished' with 30 unique values and 26% NA## new variable 'RCD_rel_to_ovulation' with 239 unique values and 30% NA## new variable 'RCD_fab' with 778 unique values and 33% NA##

## -29 -28 -27 -26 -25 -24 -23 -22 -21 -20 -19 -18 -17 -16 -15 -14 -13 -12 -11 -10 -9 -8

## 2055 2103 1957 2044 2108 2076 1247 2569 2066 2182 2024 2233 1906 2207 2170 2145 2146 2159 2164 2163 2143 2116

## -7 -6 -5 -4 -3 -2 -1

## 2068 2029 1958 1873 1732 1533 1286##

## -29 -28.9285714285714 -28.8888888888889 -28.8461538461538 -28.8 -28.75

## 579 300 3 192 8 109

## -28.695652173913 -28.6363636363636 -28.5714285714286 -28.5 -28.4210526315789 -28.3928571428571

## 15 123 28 89 51 1

## -28.3333333333333 -28.2692307692308 -28.2352941176471 -28.2 -28.125 -28.0434782608696

## 81 8 95 8 157 16

## -28 -27.9545454545455 -27.9310344827586 -27.8571428571429 -27.7777777777778 -27.75

## 143 23 2 358 3 32

## -27.6923076923077 -27.6315789473684 -27.6 -27.5 -27.4137931034483 -27.3913043478261

## 196 51 8 180 2 16

## -27.3529411764706 -27.3214285714286 -27.2727272727273 -27.2222222222222 -27.1875 -27.1428571428571

## 95 1 127 3 127 30

## -27.1153846153846 -27 -26.8965517241379 -26.875 -26.8421052631579 -26.7857142857143

## 8 253 2 6 51 317

## -26.7391304347826 -26.71875 -26.6666666666667 -26.5909090909091 -26.5384615384615 -26.5

## 16 4 81 23 200 6

## -26.4705882352941 -26.4285714285714 -26.4 -26.3793103448276 -26.3636363636364 -26.25

## 95 33 9 2 2 313

## -26.1111111111111 -26.0869565217391 -26.0526315789474 -26.0294117647059 -26 -25.9615384615385

## 4 16 54 6 155 8

## -25.9090909090909 -25.8620689655172 -25.8333333333333 -25.8 -25.78125 -25.7142857142857

## 134 2 59 9 5 379

## -25.625 -25.5882352941176 -25.5555555555556 -25.5 -25.4545454545455 -25.4347826086957

## 7 107 4 97 2 16

## -25.4166666666667 -25.3846153846154 -25.3448275862069 -25.3125 -25.2857142857143 -25.2631578947368

## 5 202 2 139 3 54

## -25.2272727272727 -25.2 -25.1785714285714 -25.1612903225806 -25.1470588235294 -25.1351351351351

## 25 10 1 1 6 7

## -25 -24.8684210526316 -24.8571428571429 -24.84375 -24.8275862068966 -24.8076923076923

## 413 2 3 5 2 8

## -24.7826086956522 -24.75 -24.7297297297297 -24.7058823529412 -24.6774193548387 -24.6428571428571

## 16 32 7 110 1 327

## -24.6153846153846 -24.6 -24.5833333333333 -24.5454545454545 -24.5 -24.4736842105263

## 2 10 4 138 6 56

## -24.4444444444444 -24.4285714285714 -24.375 -24.3243243243243 -24.3103448275862 -24.2857142857143

## 4 4 176 8 2 33

## -24.2647058823529 -24.2307692307692 -24.1935483870968 -24.1666666666667 -24.1463414634146 -24.1304347826087

## 6 209 1 68 4 16

## -24.1071428571429 -24.0909090909091 -24.0789473684211 -24 -23.9285714285714 -23.9189189189189

## 1 3 2 280 7 8

## -23.90625 -23.8888888888889 -23.8636363636364 -23.8461538461538 -23.8235294117647 -23.7931034482759

## 5 4 25 2 115 2

## -23.780487804878 -23.75 -23.7209302325581 -23.7096774193548 -23.6842105263158 -23.6538461538462

## 4 123 5 1 57 7

## -23.6363636363636 -23.625 -23.5714285714286 -23.5135135135135 -23.5 -23.4782608695652

## 5 3 397 8 7 16

## -23.4615384615385 -23.4375 -23.4146341463415 -23.4 -23.3823529411765 -23.3720930232558

## 2 141 4 11 6 5

## -23.3333333333333 -23.2894736842105 -23.2758620689655 -23.25 -23.2258064516129 -23.2142857142857

## 99 3 2 39 1 7

## -23.1818181818182 -23.1521739130435 -23.1428571428571 -23.125 -23.1081081081081 -23.0769230769231

## 140 4 4 8 8 213

## -23.0487804878049 -23.0357142857143 -23.0232558139535 -23 -22.9787234042553 -22.96875

## 4 1 5 169 1 5

## -22.9411764705882 -22.9166666666667 -22.8947368421053 -22.875 -22.8571428571429 -22.8260869565217

## 117 4 58 3 41 21

## -22.8125 -22.8 -22.7777777777778 -22.7586206896552 -22.741935483871 -22.7272727272727

## 1 11 4 2 1 5

## -22.7142857142857 -22.7027027027027 -22.6923076923077 -22.6829268292683 -22.6744186046512 -22.6666666666667

## 4 8 2 4 5 3

## -22.6595744680851 -22.5 -22.3529411764706 -22.3404255319149 -22.3333333333333 -22.3255813953488

## 1 879 1 1 3 5

## -22.3170731707317 -22.3076923076923 -22.2972972972973 -22.2857142857143 -22.2727272727273 -22.258064516129

## 4 2 8 4 5 1

## -22.2413793103448 -22.2222222222222 -22.2115384615385 -22.2 -22.1875 -22.1739130434783

## 2 4 1 14 1 22

## -22.1428571428571 -22.125 -22.1052631578947 -22.0833333333333 -22.0588235294118 -22.03125

## 43 3 58 4 119 5

## -22.0212765957447 -22 -21.9767441860465 -21.9642857142857 -21.9512195121951 -21.9230769230769

## 1 168 5 1 4 219

## -21.9 -21.8918918918919 -21.875 -21.8571428571429 -21.8478260869565 -21.8181818181818

## 2 8 9 4 4 146

## -21.7857142857143 -21.7741935483871 -21.7647058823529 -21.75 -21.7241379310345 -21.7105263157895

## 7 1 1 37 2 4

## -21.7021276595745 -21.6666666666667 -21.6346153846154 -21.6279069767442 -21.6176470588235 -21.6

## 1 101 1 5 5 14

## -21.5853658536585 -21.5625 -21.5454545454545 -21.5384615384615 -21.5217391304348 -21.5

## 4 145 3 2 22 7

## -21.4864864864865 -21.4705882352941 -21.4285714285714 -21.3829787234043 -21.375 -21.3636363636364

## 8 1 409 1 3 5

## -21.3461538461538 -21.3333333333333 -21.3157894736842 -21.3 -21.2903225806452 -21.2790697674419

## 9 1 61 2 2 5

## -21.2727272727273 -21.25 -21.219512195122 -21.2068965517241 -21.195652173913 -21.1764705882353

## 3 134 3 2 4 119

## -21.1538461538462 -21.1363636363636 -21.1111111111111 -21.09375 -21.0810810810811 -21.0714285714286

## 2 26 4 5 8 7

## -21.063829787234 -21.0576923076923 -21.0483870967742 -21 -20.9375 -20.9302325581395

## 1 1 1 295 1 5

## -20.9210526315789 -20.9090909090909 -20.8928571428571 -20.8823529411765 -20.8695652173913 -20.859375

## 4 5 1 1 23 1

## -20.8536585365854 -20.8333333333333 -20.8064516129032 -20.7692307692308 -20.75 -20.7446808510638

## 3 73 3 222 2 1

## -20.7352941176471 -20.7272727272727 -20.7142857142857 -20.7 -20.6896551724138 -20.6756756756757

## 5 3 45 2 2 7

## -20.6666666666667 -20.625 -20.5970149253731 -20.5882352941176 -20.5813953488372 -20.5714285714286

## 1 188 1 1 4 5

## -20.5645161290323 -20.5555555555556 -20.5434782608696 -20.5263157894737 -20.5 -20.4878048780488

## 1 4 4 61 9 3

## -20.4807692307692 -20.4545454545455 -20.4347826086957 -20.4255319148936 -20.4166666666667 -20.4

## 1 152 1 1 5 14

## -20.390625 -20.3846153846154 -20.3731343283582 -20.3571428571429 -20.3333333333333 -20.3225806451613

## 1 1 1 353 1 3

## -20.3125 -20.2941176470588 -20.2702702702703 -20.25 -20.2325581395349 -20.2173913043478

## 1 120 6 37 4 25

## -20.1923076923077 -20.1818181818182 -20.1724137931034 -20.15625 -20.1492537313433 -20.1428571428571

## 8 3 4 6 1 6

## -20.1369863013699 -20.1315789473684 -20.1219512195122 -20.1063829787234 -20.1 -20.0806451612903

## 1 4 3 1 1 1

## -20 -19.9342105263158 -19.9315068493151 -19.9285714285714 -19.9253731343284 -19.921875

## 473 1 1 1 1 1

## -19.9090909090909 -19.9038461538462 -19.8913043478261 -19.8837209302326 -19.875 -19.8648648648649

## 3 1 5 4 2 6

## -19.8529411764706 -19.8387096774194 -19.8214285714286 -19.8 -19.7872340425532 -19.7826086956522

## 5 3 1 13 1 1

## -19.7727272727273 -19.7560975609756 -19.75 -19.746835443038 -19.7368421052632 -19.7260273972603

## 26 1 1 1 63 1

## -19.7142857142857 -19.7058823529412 -19.7014925373134 -19.6875 -19.6666666666667 -19.6551724137931

## 6 1 1 149 1 4

## -19.6428571428571 -19.6363636363636 -19.6153846153846 -19.5967741935484 -19.5833333333333 -19.5652173913043

## 7 3 230 1 5 25

## -19.5569620253165 -19.5454545454545 -19.5394736842105 -19.5348837209302 -19.5205479452055 -19.5

## 1 6 1 4 1 110

## -19.4776119402985 -19.468085106383 -19.4594594594595 -19.453125 -19.4444444444444 -19.4117647058824

## 1 2 6 1 4 124

## -19.390243902439 -19.375 -19.3670886075949 -19.3636363636364 -19.3548387096774 -19.3478260869565

## 1 10 1 3 3 1

## -19.3421052631579 -19.3333333333333 -19.3269230769231 -19.3150684931507 -19.2857142857143 -19.2537313432836

## 5 1 1 1 423 1

## -19.25 -19.2391304347826 -19.2352941176471 -19.2307692307692 -19.21875 -19.2

## 1 5 1 1 6 13

## -19.1860465116279 -19.1772151898734 -19.1666666666667 -19.1489361702128 -19.1447368421053 -19.1379310344828

## 4 1 72 2 1 4

## -19.1304347826087 -19.125 -19.1176470588235 -19.1129032258065 -19.1095890410959 -19.0909090909091

## 1 3 1 1 1 152

## -19.0714285714286 -19.0625 -19.0588235294118 -19.0540540540541 -19.0384615384615 -19.0298507462687

## 1 1 1 6 8 1

## -19.0243902439024 -19 -18.9873417721519 -18.984375 -18.9705882352941 -18.9473684210526

## 1 182 1 1 5 66

## -18.9285714285714 -18.9130434782609 -18.9041095890411 -18.9 -18.8888888888889 -18.8823529411765

## 7 25 1 1 4 1

## -18.8709677419355 -18.8659793814433 -18.8571428571429 -18.8461538461538 -18.8372093023256 -18.8297872340426

## 3 1 6 1 4 2

## -18.8235294117647 -18.8181818181818 -18.8059701492537 -18.7974683544304 -18.75 -18.7113402061856

## 2 3 1 1 369 1

## -18.7058823529412 -18.6986301369863 -18.695652173913 -18.6666666666667 -18.6585365853659 -18.6486486486486

## 1 1 1 1 1 6

## -18.6428571428571 -18.6363636363636 -18.6290322580645 -18.6206896551724 -18.6075949367089 -18.6

## 1 6 1 4 1 13

## -18.5869565217391 -18.5820895522388 -18.5714285714286 -18.5567010309278 -18.5526315789474 -18.5454545454545

## 6 1 46 1 5 3

## -18.5294117647059 -18.515625 -18.5106382978723 -18.5 -18.4931506849315 -18.4883720930233

## 127 1 2 9 1 4

## -18.4782608695652 -18.4615384615385 -18.4375 -18.4285714285714 -18.4177215189873 -18.4090909090909

## 1 235 1 6 1 27

## -18.4020618556701 -18.3870967741935 -18.375 -18.3582089552239 -18.3552631578947 -18.3529411764706

## 1 3 5 1 1 1

## -18.3333333333333 -18.3 -18.2926829268293 -18.2876712328767 -18.28125 -18.2727272727273

## 104 1 1 1 6 3

## -18.2608695652174 -18.25 -18.2474226804124 -18.2432432432432 -18.2352941176471 -18.2278481012658

## 26 1 1 6 2 1

## -18.2142857142857 -18.1914893617021 -18.1818181818182 -18.1764705882353 -18.1730769230769 -18.1578947368421

## 357 2 6 1 1 64

## -18.1451612903226 -18.1395348837209 -18.134328358209 -18.125 -18.1034482758621 -18.0927835051546

## 1 4 1 11 4 1

## -18.0882352941176 -18.0821917808219 -18.0769230769231 -18.046875 -18.0434782608696 -18.0379746835443

## 5 1 1 1 1 1

## -18 -17.9605263157895 -17.9411764705882 -17.9381443298969 -17.9347826086957 -17.9268292682927

## 321 1 2 1 6 1

## -17.9166666666667 -17.910447761194 -17.9032258064516 -17.8846153846154 -17.8767123287671 -17.8723404255319

## 5 1 3 8 1 2

## -17.8571428571429 -17.8481012658228 -17.8378378378378 -17.8260869565217 -17.8235294117647 -17.8125

## 45 1 6 1 1 158

## -17.7906976744186 -17.7857142857143 -17.7835051546392 -17.7777777777778 -17.7631578947368 -17.75

## 4 1 1 4 5 1

## -17.7272727272727 -17.7 -17.6923076923077 -17.6865671641791 -17.6785714285714 -17.6712328767123

## 153 1 1 1 1 1

## -17.6666666666667 -17.6612903225806 -17.6582278481013 -17.6470588235294 -17.6288659793814 -17.625

## 1 1 1 130 1 5

## -17.6086956521739 -17.5961538461538 -17.5862068965517 -17.578125 -17.5714285714286 -17.5657894736842

## 25 1 4 1 6 1

## -17.5609756097561 -17.5531914893617 -17.5 -17.4742268041237 -17.4705882352941 -17.4683544303797

## 1 2 235 1 1 1

## -17.4657534246575 -17.4626865671642 -17.4545454545455 -17.4418604651163 -17.4324324324324 -17.4193548387097

## 1 1 3 4 6 3

## -17.4 -17.3913043478261 -17.3684210526316 -17.3571428571429 -17.3529411764706 -17.34375

## 13 1 64 1 2 7

## -17.3333333333333 -17.319587628866 -17.3076923076923 -17.2941176470588 -17.2826086956522 -17.2784810126582

## 1 1 236 1 5 1

## -17.2727272727273 -17.2602739726027 -17.25 -17.2388059701493 -17.2340425531915 -17.2222222222222

## 6 1 41 1 2 4

## -17.2058823529412 -17.1951219512195 -17.1875 -17.1818181818182 -17.1774193548387 -17.1739130434783

## 6 1 1 3 1 1

## -17.1710526315789 -17.1649484536082 -17.1428571428571 -17.1176470588235 -17.109375 -17.1

## 1 1 420 1 1 1

## -17.093023255814 -17.0886075949367 -17.0833333333333 -17.0689655172414 -17.0588235294118 -17.0547945205479

## 4 1 5 4 2 1

## -17.0454545454545 -17.027027027027 -17.0192307692308 -17.0149253731343 -17.0103092783505 -17

## 27 6 1 1 1 188

## -16.9736842105263 -16.9565217391304 -16.9411764705882 -16.9354838709677 -16.9285714285714 -16.9230769230769

## 5 26 1 3 1 1

## -16.9148936170213 -16.9090909090909 -16.8987341772152 -16.875 -16.8556701030928 -16.8493150684932

## 2 3 1 205 1 1

## -16.8292682926829 -16.8181818181818 -16.8 -16.7910447761194 -16.7857142857143 -16.7763157894737

## 1 6 13 1 6 1

## -16.7647058823529 -16.75 -16.7441860465116 -16.7391304347826 -16.7307692307692 -16.7142857142857

## 128 1 4 1 9 6

## -16.7088607594937 -16.7010309278351 -16.6935483870968 -16.6666666666667 -16.6438356164384 -16.640625

## 1 1 1 106 1 1

## -16.6363636363636 -16.6304347826087 -16.6216216216216 -16.6071428571429 -16.5957446808511 -16.5882352941176

## 2 5 6 2 2 1

## -16.5789473684211 -16.5671641791045 -16.5625 -16.551724137931 -16.5384615384615 -16.5217391304348

## 64 1 1 4 1 1

## -16.5189873417722 -16.5 -16.4705882352941 -16.4634146341463 -16.4516129032258 -16.4423076923077

## 1 118 2 1 3 1

## -16.4383561643836 -16.4285714285714 -16.4117647058824 -16.40625 -16.3953488372093 -16.3815789473684

## 1 45 1 7 4 1

## -16.3636363636364 -16.3432835820896 -16.3333333333333 -16.3291139240506 -16.3235294117647 -16.304347826087

## 156 1 2 1 5 26

## -16.2857142857143 -16.2765957446809 -16.25 -16.2352941176471 -16.2328767123288 -16.2162162162162

## 7 2 148 1 1 6

## -16.2096774193548 -16.2 -16.1842105263158 -16.1764705882353 -16.171875 -16.1538461538462

## 1 13 5 2 1 238

## -16.1392405063291 -16.125 -16.1194029850746 -16.1111111111111 -16.0975609756098 -16.0909090909091

## 1 6 1 5 1 2

## -16.0869565217391 -16.0714285714286 -16.0588235294118 -16.046511627907 -16.0344827586207 -16.027397260274

## 1 357 1 4 4 1

## -16 -15.9868421052632 -15.9782608695652 -15.9677419354839 -15.9574468085106 -15.9493670886076

## 194 1 5 3 2 1

## -15.9375 -15.9090909090909 -15.9 -15.8955223880597 -15.8823529411765 -15.8695652173913

## 158 6 1 1 128 1

## -15.8653846153846 -15.8571428571429 -15.8333333333333 -15.8219178082192 -15.8181818181818 -15.8108108108108

## 1 7 73 1 2 6

## -15.7894736842105 -15.7692307692308 -15.7594936708861 -15.75 -15.7317073170732 -15.7258064516129

## 64 1 1 43 1 1

## -15.7142857142857 -15.7058823529412 -15.703125 -15.6976744186047 -15.6818181818182 -15.6716417910448

## 44 1 1 4 29 1

## -15.6666666666667 -15.6521739130435 -15.6428571428571 -15.6382978723404 -15.625 -15.6164383561644

## 2 24 1 2 13 1

## -15.6 -15.5921052631579 -15.5882352941176 -15.5769230769231 -15.5696202531646 -15.5555555555556

## 14 1 2 9 1 5

## -15.5454545454545 -15.5357142857143 -15.5294117647059 -15.5172413793103 -15.5 -15.4838709677419

## 2 2 1 4 8 4

## -15.46875 -15.4591836734694 -15.4545454545455 -15.4477611940298 -15.4411764705882 -15.4347826086957

## 7 1 6 1 5 1

## -15.4285714285714 -15.4166666666667 -15.4054054054054 -15.3947368421053 -15.3896103896104 -15.3846153846154

## 6 5 7 5 1 1

## -15.379746835443 -15.375 -15.3658536585366 -15.3571428571429 -15.3529411764706 -15.3488372093023

## 1 6 1 6 1 4

## -15.3333333333333 -15.3260869565217 -15.3191489361702 -15.3125 -15.3 -15.2941176470588

## 2 4 2 1 1 2

## -15.2884615384615 -15.2727272727273 -15.241935483871 -15.234375 -15.2272727272727 -15.2238805970149

## 1 2 1 1 1 1

## -15.2173913043478 -15.2142857142857 -15.1973684210526 -15.1948051948052 -15.1898734177215 -15.1764705882353

## 1 1 1 1 1 1

## -15 -14 -13 -12 -11 -10

## 1848 1877 1904 1918 1936 1957

## -9 -8 -7 -6 -5 -4

## 1975 2000 2028 2032 2049 2044

## -3 -2 -1

## 2045 2054 2052##

## -292 -291 -290 -289 -288 -287 -286 -285 -284 -283 -282 -281 -280 -279 -278 -277 -276 -275 -274 -273 -272 -271

## 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

## -270 -269 -268 -267 -266 -265 -264 -263 -262 -261 -260 -259 -258 -247 -246 -245 -244 -243 -242 -241 -240 -239

## 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 2 2 1 1 1 1

## -238 -237 -236 -235 -234 -233 -232 -195 -194 -193 -192 -191 -190 -189 -188 -187 -186 -185 -184 -183 -182 -181

## 1 1 1 1 1 1 1 2 2 2 1 1 1 1 1 1 1 1 1 1 1 1

## -180 -179 -178 -177 -176 -175 -167 -166 -165 -164 -163 -162 -161 -160 -159 -158 -157 -156 -155 -154 -153 -152

## 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 2 2 2 2 2

## -151 -150 -149 -148 -147 -146 -145 -144 -143 -142 -141 -139 -138 -137 -136 -135 -134 -133 -132 -131 -130 -129

## 2 2 2 2 1 1 1 1 1 1 1 2 3 3 2 3 3 3 2 2 1 1

## -128 -127 -126 -125 -124 -123 -122 -121 -120 -119 -118 -117 -116 -115 -114 -113 -112 -111 -110 -109 -108 -107

## 1 1 1 2 1 1 1 1 2 2 2 3 2 2 2 2 2 2 2 2 2 2

## -106 -105 -104 -103 -102 -101 -100 -99 -98 -97 -96 -95 -94 -93 -92 -91 -90 -89 -88 -87 -86 -85

## 2 2 2 3 4 4 4 5 5 4 5 5 5 5 6 7 8 9 9 8 9 8

## -84 -83 -82 -81 -80 -79 -78 -77 -76 -75 -74 -73 -72 -71 -70 -69 -68 -67 -66 -65 -64 -63

## 10 12 12 12 12 13 14 15 17 18 21 22 22 22 21 25 25 25 24 23 25 26

## -62 -61 -60 -59 -58 -57 -56 -55 -54 -53 -52 -51 -50 -49 -48 -47 -46 -45 -44 -43 -42 -41

## 25 26 30 33 33 35 41 45 50 52 54 59 64 68 73 73 75 76 85 87 88 87

## -40 -39 -38 -37 -36 -35 -34 -33 -32 -31 -30 -29 -28 -27 -26 -25 -24 -23 -22 -21 -20 -19

## 98 106 113 129 153 183 217 268 327 426 550 700 1003 1216 1351 1473 1558 1600 1641 1685 1726 1774

## -18 -17 -16 -15 -14 -13 -12 -11 -10 -9 -8 -7 -6 -5 -4 -3 -2 -1

## 1801 1809 1833 1848 1877 1904 1918 1936 1957 1975 2000 2028 2032 2049 2044 2045 2054 2052##

## -50 -49 -48 -47 -46 -45 -44 -43 -42 -41 -40 -39 -38 -37 -36 -35 -34 -33 -32 -31 -30 -29

## 3 3 4 4 4 5 5 3 7 8 16 18 29 32 38 84 119 156 216 295 606 834

## -28 -27 -26 -25 -24 -23 -22 -21 -20 -19 -18 -17 -16 -15 -14 -13 -12 -11 -10 -9 -8 -7

## 1516 1743 1873 2016 2051 2096 2112 2137 2142 2165 2166 2158 2162 2167 2145 2146 2159 2164 2163 2143 2116 2068

## -6 -5 -4 -3 -2 -1 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

## 2029 1958 1873 1732 1533 1286 958 732 565 428 360 295 265 234 202 187 171 160 151 142 134 124

## 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37

## 118 113 104 99 92 81 75 71 66 61 54 53 45 42 41 39 36 31 28 28 26 25

## 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59

## 23 22 21 19 18 17 16 15 14 13 13 14 13 12 11 10 10 9 9 7 6 6

## 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81

## 5 4 4 3 3 4 2 2 2 2 1 1 1 1 1 1 1 1 1 1 1 1

## 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103

## 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

## 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125

## 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

## 126 127 128 129 130 131 132 133 160

## 1 1 1 1 1 1 1 1 1##

## -29 -28 -27 -26 -25 -24 -23 -22 -21 -20 -19 -18 -17 -16 -15 -14 -13 -12 -11 -10 -9 -8

## 2055 2103 1957 2044 2108 2076 1247 2569 2066 2182 2024 2233 1906 2207 2170 2145 2146 2159 2164 2163 2143 2116

## -7 -6 -5 -4 -3 -2 -1

## 2068 2029 1958 1873 1732 1533 1286##

## FALSE TRUE

## 58508 6831## s3_daily$RCD_inferred[is.na(s3_daily$RCD_inferred_squished)]

## 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87

## 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

## 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 105 106

## 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

## 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124

## 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

## 125 126 127 128 129 130 131 132 133 160 66 67 68 69 104 -50 -49 -43

## 1 1 1 1 1 1 1 1 1 1 2 2 2 2 2 3 3 3

## 63 64 -48 -47 -46 61 62 65 -45 -44 60 58 59 -42 57 -41 55 56

## 3 3 4 4 4 4 4 4 5 5 5 6 6 7 7 8 9 9

## 53 54 52 51 47 48 50 46 49 45 44 43 42 41 40 39 38 37

## 10 10 11 12 13 13 13 14 14 15 16 17 18 19 21 22 23 25

## 36 34 35 33 32 31 30 29 28 27 26 25 24 23 22 21 20 19

## 26 28 28 31 36 39 41 42 45 53 54 61 66 71 75 81 92 99

## 18 17 16 15 14 13 12 11 10 9 8 7 6 5 4 3 2 1

## 104 113 118 124 134 142 151 160 171 187 202 234 265 295 360 428 565 732

## 0 <NA>

## 958 13910## s3_daily$RCD[is.na(s3_daily$RCD_squished)]

## -292 -291 -290 -289 -288 -287 -286 -285 -284 -283 -282 -281 -280 -279 -278 -277 -276 -275

## 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

## -274 -273 -272 -271 -270 -269 -268 -267 -266 -265 -264 -263 -262 -261 -260 -259 -258 -247

## 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

## -246 -242 -241 -240 -239 -238 -237 -236 -235 -234 -233 -232 -192 -191 -190 -189 -188 -187

## 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

## -186 -185 -184 -183 -182 -181 -180 -179 -178 -177 -176 -175 -167 -166 -165 -164 -163 -162

## 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

## -161 -160 -159 -158 -147 -146 -145 -144 -143 -142 -141 -130 -129 -128 -127 -126 -124 -123

## 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

## -122 -121 -245 -244 -243 -195 -194 -193 -157 -156 -155 -154 -153 -152 -151 -150 -149 -148

## 1 1 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

## -139 -136 -132 -131 -125 -120 -119 -118 -116 -115 -114 -113 -112 -111 -110 -109 -108 -107

## 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

## -106 -105 -104 -138 -137 -135 -134 -133 -117 -103 -102 -101 -100 -97 -99 -98 -96 -95

## 2 2 2 3 3 3 3 3 3 3 4 4 4 4 5 5 5 5

## -94 -93 -92 -91 -90 -87 -85 -89 -88 -86 -84 -83 -82 -81 -80 -79 -78 -77

## 5 5 6 7 8 8 8 9 9 9 10 12 12 12 12 13 14 15

## -76 -75 -74 -70 -73 -72 -71 -65 -66 -69 -68 -67 -64 -62 -63 -61 -60 -59

## 17 18 21 21 22 22 22 23 24 25 25 25 25 25 26 26 30 33

## -58 -57 -56 -55 -54 -53 -52 -51 -50 -49 -48 -47 -46 -45 -44 -43 -41 -42

## 33 35 41 45 50 52 54 59 64 68 73 73 75 76 85 87 87 88

## <NA>

## 23818## s3_daily$RCD_inferred_squished

## -29 -28 -27 -26 -25 -24 -23 -22 -21 -20 -19 -18 -17 -16 -15 -14 -13 -12

## 2055 2103 1957 2044 2108 2076 1247 2569 2066 2182 2024 2233 1906 2207 2170 2145 2146 2159

## -11 -10 -9 -8 -7 -6 -5 -4 -3 -2 -1 <NA>

## 2164 2163 2143 2116 2068 2029 1958 1873 1732 1533 1286 20787##

## 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22

## 2093 2107 2122 2124 2151 2166 2174 2166 2160 2172 2174 2174 2172 2177 2168 2162 2165 2167 2169 2150 2141 2102

## 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44

## 2071 2007 1927 1787 1612 1349 976 775 619 492 421 343 311 262 230 208 186 169 158 152 146 137

## 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66

## 127 118 108 99 92 86 80 70 67 65 58 55 48 47 46 45 40 34 32 31 27 24

## 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88

## 21 21 21 19 17 14 14 14 13 12 11 12 13 13 12 10 10 9 9 9 8 8

## 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110

## 8 8 6 5 5 4 6 4 4 4 1 1 1 1 1 1 1 1 1 1 1 1

## 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132

## 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 1

## 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154

## 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

## 155 156 157 158 159 160 161 162 163 189

## 1 1 1 1 1 1 1 1 1 1days <- data.frame(

RCD = c(-28:-1, -29:-40),

FCD = c(1:40),

prc_stirn_b = c(.01, .01, .02, .03, .05, .09, .16, .27, .38, .48, .56, .58, .55, .48, .38, .28, .20, .14, .10, .07, .06, .04, .03, .02, .01, .01, .01, .01, rep(.01, times = 12)),

# rep(.01, times = 70)), # gangestad uses .01 here, but I think such cases are better thrown than kept, since we might simply have missed a mens

prc_wcx_b = c(.000, .000, .001, .002, .004, .009, .018, .032, .050, .069, .085, .094, .093, .085, .073, .059, .047, .036, .028, .021, .016, .013, .010, .008, .007, .006, .005, .005, rep(.005, times = 12))

)

# rep(NA_real_, times = 70)) # gangestad uses .005 here, but I think such cases are better thrown than kept, since we might simply have missed a mens

days = days %>% mutate(

fertile_narrow = if_else(between(RCD,-18, -14), mean(prc_stirn_b[between(RCD, -18, -14)], na.rm = T),

if_else(between(RCD, -11, -3), mean(prc_stirn_b[between(RCD,-11, -3)], na.rm = T), NA_real_)), # these days are likely infertile

fertile_broad = if_else(between(RCD,-21,-13), mean(prc_stirn_b[between(RCD,-21,-13)], na.rm = T),

if_else(between(RCD,-11,-3), mean(prc_stirn_b[between(RCD,-11,-3)], na.rm = T), NA_real_)), # these days are likely infertile

fertile_window = factor(if_else(fertile_broad > 0.1, if_else(!is.na(fertile_narrow), "narrow", "broad"),"infertile"), levels = c("infertile","broad", "narrow")),

premenstrual_phase = ifelse(between(RCD, -6, -1), TRUE, FALSE)

)## mutate: new variable 'fertile_narrow' with 3 unique values and 65% NA## new variable 'fertile_broad' with 3 unique values and 55% NA## new variable 'fertile_window' with 4 unique values and 55% NA## new variable 'premenstrual_phase' with 2 unique values and 0% NA# lh_days = days %>% mutate(

# DRLH =

# FCD

# - 1 # because FCD starts counting at 1

# - 15 # because ovulation happens on ~14.6 days after menstrual onset

# # + 1 # we already added 1 to the date of the LH surge above, as it happens 24-48 hours before ovulation

# ) %>% select(-FCD, -RCD_for_merge)

# from Jünger/Stern et al. 2018 Supplementary Material

# Day relative to ovulation Schwartz et al., (1980) Wilcox et al., (1998) Colombo & Masarotto (2000) Weighted average

lh_days <- tibble(

conception_risk_lh = c(0.00, 0.01, 0.02, 0.06, 0.16, 0.20, 0.25, 0.24, 0.10, 0.02, 0.02 ),

DRLH = c(-8, -7, -6, -5, -4, -3, -2, -1, 0, 1, 2)

) %>%

mutate(fertile_lh = conception_risk_lh/max(conception_risk_lh))## mutate: new variable 'fertile_lh' with 9 unique values and 0% NA# from blake et al. supplement (unweighted)

blake_meta <- tibble::tibble(DRLH = -10:6, CR = c(0,0,0.00267,

0.00998,

0.02600,

0.06180,

0.11600,

0.15917,

0.20717,

0.21633,

0.15667,

0.06540,

0.05250,

0.01550,

0.00300,

0.00000, 0))

blake_meta %>% left_join(lh_days) %>%

mutate(conception_risk_lh = na_if(conception_risk_lh, 0)) %>%

summarise(cor(conception_risk_lh, CR, use = 'pairwise.complete.obs'))## Joining, by = "DRLH"## left_join: added 2 columns (conception_risk_lh, fertile_lh)## > rows only in x 6## > rows only in y ( 0)## > matched rows 11## > ====## > rows total 17## mutate: changed one value (6%) of 'conception_risk_lh' (1 new NA)## summarise: now one row and one column, ungrouped## # A tibble: 1 x 1

## `cor(conception_risk_lh, CR, use = "pairwise.complete.obs")`

## <dbl>

## 1 0.938# blake and juenger values are very close, I'll use Juenger

s3_daily = s3_daily %>% left_join(lh_days, by = "DRLH") %>%

mutate(fertile_lh = if_else(is.na(fertile_lh) &

between(DRLH, -15, 15), 0, fertile_lh))## left_join: added 2 columns (conception_risk_lh, fertile_lh)## > rows only in x 76,906## > rows only in y ( 0)## > matched rows 2,343## > ========## > rows total 79,249## mutate: changed 3,262 values (4%) of 'fertile_lh' (3262 fewer NA)## select: dropped one variable (FCD)## left_join: added 6 columns (prc_stirn_b, prc_wcx_b, fertile_narrow, fertile_broad, fertile_window, …)## > rows only in x 25,790## > rows only in y ( 0)## > matched rows 53,459## > ========## > rows total 79,249## select: dropped one variable (FCD)names(rcd_squished) = paste0(names(rcd_squished), "_squished")

s3_daily = left_join(s3_daily, rcd_squished, by = c("RCD_squished_rounded" = "RCD_squished"))## left_join: added 6 columns (prc_stirn_b_squished, prc_wcx_b_squished, fertile_narrow_squished, fertile_broad_squished, fertile_window_squished, …)## > rows only in x 25,790## > rows only in y ( 11)## > matched rows 53,459## > ========## > rows total 79,249## select: dropped one variable (FCD)names(rcd_inferred_squished) = paste0(names(rcd_inferred_squished), "_inferred_squished")

s3_daily = left_join(s3_daily, rcd_inferred_squished, by = "RCD_inferred_squished")## left_join: added 6 columns (prc_stirn_b_inferred_squished, prc_wcx_b_inferred_squished, fertile_narrow_inferred_squished, fertile_broad_inferred_squished, fertile_window_inferred_squished, …)## > rows only in x 20,787## > rows only in y ( 11)## > matched rows 58,462## > ========## > rows total 79,249## select: dropped one variable (RCD)names(fcd_days) = paste0(names(fcd_days), "_forward_counted")

fcd_days = fcd_days %>% rename(FCD = FCD_forward_counted)## rename: renamed one variable (FCD)## left_join: added 6 columns (prc_stirn_b_forward_counted, prc_wcx_b_forward_counted, fertile_narrow_forward_counted, fertile_broad_forward_counted, fertile_window_forward_counted, …)## > rows only in x 16,148## > rows only in y ( 0)## > matched rows 63,101## > ========## > rows total 79,249## select: dropped one variable (FCD)## mutate: converted 'RCD' from integer to double (0 new NA)names(aware_luteal_squished) = paste0(names(aware_luteal_squished), "_aware_luteal")

s3_daily$DAL <- as.numeric(s3_daily$DAL)